| frog nearest neighbors | Litoria | Leptodactylidae | Rana | Eleutherodactylus |

|---|

We provide an implementation of the GloVe model for learning word representations. Please see the project page for more information.

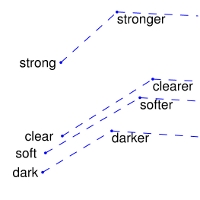

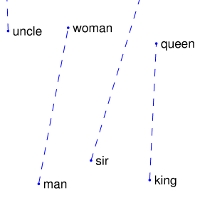

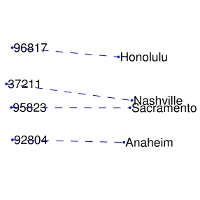

man -> woman | city -> zip | comparative -> superlative

:-------------------------:|:-------------------------:|:-------------------------:|:-------------------------:|

Pre-trained word vectors are made available under the Public Domain Dedication and License

- Wikipedia 2014 + Gigaword 5 (6B tokens, 400K vocab, uncased, 50d, 100d, 200d, & 300d vectors, 822 MB download): glove.6B.zip

- Common Crawl (42B tokens, 1.9M vocab, uncased, 300d vectors, 1.75 GB download): glove.42B.300d.zip

- Common Crawl (840B tokens, 2.2M vocab, cased, 300d vectors, 2.03 GB download): glove.840B.300d.zip

- Twitter (2B tweets, 27B tokens, 1.2M vocab, uncased, 25d, 50d, 100d, & 200d vectors, 1.42 GB download): glove.twitter.27B.zip Ruby script for preprocessing Twitter data

$ git clone http://github.com/stanfordnlp/glove

$ cd glove && make

$ ./demo.sh

The demo.sh scipt downloads a small corpus, consisting of the first 100M characters of Wikipedia. It collects unigram counts, constructs and shuffles cooccurrence data, and trains a simple version of the GloVe model. It also runs a word analogy evaluation script in python. Continue reading for further usage details and instructions for how to run on your own corpus.

This package includes four main tools:

Constructs unigram counts from a corpus, and optionally thresholds the resulting vocabulary based on total vocabulary size or minimum frequency count. This file should already consist of whitespace-separated tokens. Use something like the Stanford Tokenizer (http://nlp.stanford.edu/software/tokenizer.shtml) first on raw text.

Constructs word-word cooccurrence statistics from a corpus. The user should supply a vocabulary file, as produced by 'vocab_count', and may specify a variety of parameters, as described by running './build/cooccur'.

Shuffles the binary file of cooccurrence statistics produced by 'cooccur'. For large files, the file is automatically split into chunks, each of which is shuffled and stored on disk before being merged and shuffled togther. The user may specify a number of parameters, as described by running './build/shuffle'.

Train the GloVe model on the specified cooccurrence data, which typically will be the output of the 'shuffle' tool. The user should supply a vocabulary file, as given by 'vocab_count', and may specify a number of other parameters, which are described by running './build/glove'.

All work contained in this package is licensed under the Apache License, Version 2.0. See the include LICENSE file.