Welcome to OmniMind, the world’s first AGI project that can do anything you need. This isn’t just another open-source repository—it’s a grand, community-powered revolution that solves real-world problems by harnessing a universal intelligence. We’re building a platform where every developer, researcher, and curious mind can contribute, iterate, and build a truly adaptive AGI that continuously learns and evolves.

“Invest your time in OmniMind—where groundbreaking ideas merge with practical execution to shape the future of AI.”

- Overview

- Why Invest Your Time

- Unique Problem Solving & Use Cases

- How OmniMind Works

- Backend Architecture & File Structure

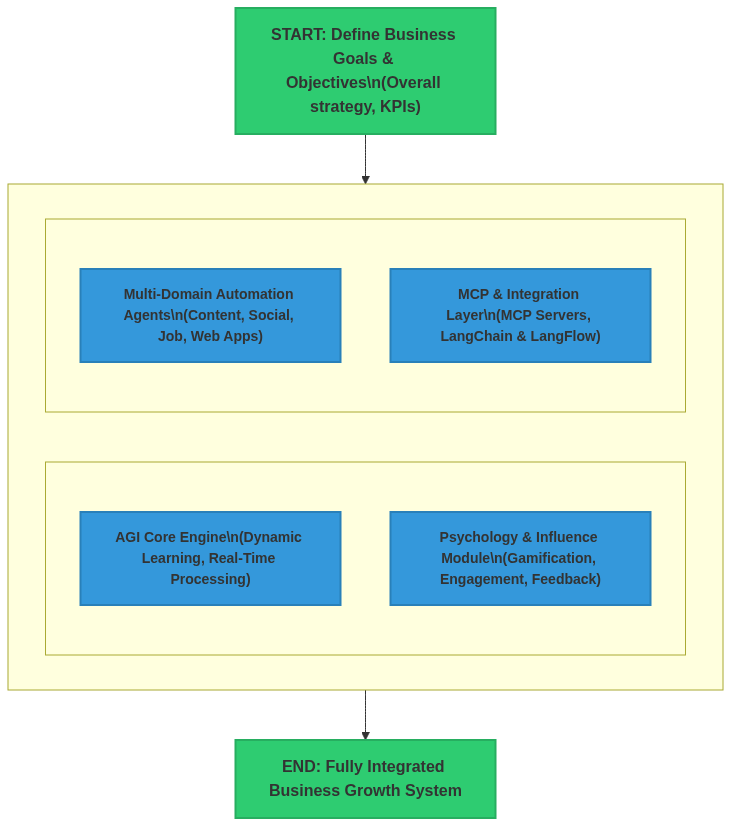

- Modules & System Architecture

- Installation & Setup

- Usage

- Contributing

- Credits & Acknowledgments

- License

- Last Updated

OmniMind is a revolutionary project that aims to transcend the limits of narrow AI. It is designed to be a universal intelligence, capable of executing any task, automating processes across multiple domains, and solving complex real-world challenges. Built with a blend of modern frameworks and cutting-edge AI technologies, OmniMind integrates MCP servers, LangChain, LangFlow, AI agents, Pipedream, React, Python, Node.js, Django, and FastAPI. It also supports all major LLMs (from GPT and Claude to Gemini, PaLM, LaMDA, and beyond) and is designed to be compatible with the MCP clients and servers showcased in the awesome MCP-servers repository.

- Universal Utility: OmniMind is built to handle a wide range of tasks—from content creation and social media automation to complex data analytics and project management. It’s not just theory; it’s a fully operational AGI framework.

- Practical Impact: Whether you’re streamlining business operations or innovating in AI research, OmniMind provides practical, scalable solutions that reduce time, effort, and cost.

- Future-Proof Investment: By contributing to OmniMind, you’re joining a community that is shaping the future of technology. Your contributions are not only valuable today—they build the foundation for tomorrow’s breakthroughs.

- Collaborative Growth: OmniMind is an open platform where your ideas, code, and feedback directly influence the evolution of a true AGI.

- Learning & Sharing: Work alongside expert developers and enthusiasts, exchange knowledge, and gain firsthand experience with state-of-the-art AI systems.

- Open Impact: Your involvement helps create a system that benefits society at large, democratizing advanced AI for everyone.

OmniMind isn’t just about theoretical capability—it solves real, pressing problems. Here’s an example use case that illustrates its power:

Imagine simply saying:

"Create a comprehensive analytics dashboard for my e-commerce store."

Behind the Scenes:

- Command Processing:

The agent parses your command and identifies key actions like data aggregation, visualization, and performance monitoring.

- MCP Request Generation:

An MCP (Model Context Protocol) request is dynamically generated. It defines the application (e.g., a dashboard service), the action (e.g., create_dashboard), and the parameters (e.g., metrics like conversion rates, traffic sources, and sales figures).

- Adapter Routing:

The MCP Layer routes the request to our dedicated e-commerce analytics adapter, which translates it into API calls for data sources (e.g., sales databases, Google Analytics).

- Real-Time Integration:

The response is processed in real time, and our backend pushes a confirmation notification to your dashboard via real-time channels (e.g., Supabase or WebSocket).

- Outcome:

Within moments, a fully functional analytics dashboard is deployed: tailored to your store’s data, interactive, and continuously updated.

This is just one example of how OmniMind translates natural language commands into complex, automated workflows—demonstrating its capability to simplify and solve challenging problems.
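The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not OmniMind's actual implementation: the `McpRequest` shape only mirrors the application/action/parameters triple described above (it is not the wire format of the Model Context Protocol specification), and `parse_command`, `ecommerce_analytics_adapter`, `ADAPTERS`, and `route` are hypothetical names. Keyword matching stands in for the real NLP step.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class McpRequest:
    """Hypothetical request shape: application, action, parameters."""
    application: str
    action: str
    parameters: Dict[str, Any] = field(default_factory=dict)


# Hypothetical keyword table standing in for the NLP step.
METRIC_KEYWORDS: Dict[str, List[str]] = {
    "analytics": ["conversion_rate", "traffic_sources", "sales"],
}


def parse_command(command: str) -> McpRequest:
    """Turn a natural language command into a request (toy keyword matching)."""
    text = command.lower()
    if "dashboard" not in text:
        raise ValueError(f"No known action in command: {command!r}")
    metrics: List[str] = []
    for keyword, names in METRIC_KEYWORDS.items():
        if keyword in text:
            metrics.extend(names)
    return McpRequest("dashboard_service", "create_dashboard", {"metrics": metrics})


def ecommerce_analytics_adapter(request: McpRequest) -> Dict[str, Any]:
    """Stand-in adapter; a real one would call sales databases or analytics APIs."""
    return {
        "status": "created",
        "action": request.action,
        "widgets": list(request.parameters.get("metrics", [])),
    }


# Routing table: application name -> adapter function.
ADAPTERS: Dict[str, Callable[[McpRequest], Dict[str, Any]]] = {
    "dashboard_service": ecommerce_analytics_adapter,
}


def route(request: McpRequest) -> Dict[str, Any]:
    """Look up the adapter for the request's application and invoke it."""
    adapter = ADAPTERS.get(request.application)
    if adapter is None:
        raise LookupError(f"No adapter registered for {request.application!r}")
    return adapter(request)
```

Calling `route(parse_command("Create a comprehensive analytics dashboard for my e-commerce store."))` returns a dictionary describing the dashboard to create; the real-time notification step is left out of this sketch.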

- Natural Language Processing:

Leverages GPT-4, BERT, and other models to understand and process user commands.

- MCP Integration:

Uses MCP servers to standardize communication between AI agents and external APIs.

- Dynamic Agent Orchestration:

Agents built on LangChain and LangFlow work together to execute tasks across multiple domains.

- Real-Time Feedback & Automation:

Integrates Pipedream, Node.js, and FastAPI to deliver real-time processing and updates.

Figure: OmniMind processes a natural language command into a series of automated actions, from command parsing to real-time execution.
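One way to picture the flow from command parsing to real-time execution is a pipeline that emits a status event after each stage, so a client can display live progress. The sketch below uses only Python's standard `asyncio` module; the stage names and the `run_pipeline` and `collect_stages` functions are illustrative assumptions, and the `asyncio.sleep(0)` call is a placeholder for real work.

```python
import asyncio
from typing import AsyncIterator, Dict, List

# Illustrative stage names; the real engine may split the work differently.
STAGES = ["parse_command", "build_mcp_request", "route_to_adapter", "execute"]


async def run_pipeline(command: str) -> AsyncIterator[Dict[str, str]]:
    """Yield one status event per stage so a client can show live progress."""
    for stage in STAGES:
        await asyncio.sleep(0)  # placeholder for the stage's real work
        yield {"stage": stage, "status": "done", "command": command}


async def collect_stages(command: str) -> List[str]:
    """Consume the event stream and return the completed stage names in order."""
    return [event["stage"] async for event in run_pipeline(command)]
```

Running `asyncio.run(collect_stages("Create a dashboard"))` returns the stage names in order. In a deployed system, each event would instead be pushed to the dashboard over a real-time channel.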

The backend of OmniMind is designed for scalability and maintainability, built primarily with Node.js/Express and Python for AI-specific tasks. Here’s a glimpse of the structure:

OmniMind/

├── backend/

│ ├── src/

│ │ ├── controllers/

│ │ │ ├── agiController.js # Processes AGI commands

│ │ │ ├── authController.js # Manages user authentication

│ │ ├── models/

│ │ │ ├── user.js # User schema and methods

│ │ │ ├── task.js # Task management schema

│ │ ├── routes/

│ │ │ ├── agiRoutes.js # Routes for AGI engine endpoints

│ │ │ ├── authRoutes.js # Authentication endpoints

│ │ ├── services/

│ │ │ ├── nlpService.js # Integrates GPT-4/BERT for processing

│ │ │ ├── mcpService.js # Handles MCP request routing

│ │ │ ├── apiService.js # Manages external API calls

│ │ ├── utils/

│ │ │ ├── logger.js # Logging and debugging utilities

│ │ │ ├── config.js # Application configuration

│ │ ├── app.js # Main Express app initialization

│ ├── tests/

│ │ ├── agi.test.js # Unit tests for AGI functionalities

│ ├── package.json # Backend dependencies and scripts

│ ├── .env.example # Sample environment configuration

├── frontend/

│ ├── public/

│ ├── src/

│ │ ├── components/ # Reusable UI components

│ │ ├── views/ # Main pages and dashboard views

│ │ ├── App.js # Frontend entry point (React)

│ ├── package.json # Frontend dependencies

├── docs/

│ ├── architecture.md # In-depth system architecture documentation

│ ├── contributing.md # Contribution guidelines and code of conduct

├── .gitignore

├── README.md # This file

├── LICENSE

Key Points:

- Modular & Scalable:

Each segment of the backend (controllers, models, services) is isolated for clarity and ease of maintenance.

- Real-Time Processing:

Integrated logging, testing, and a microservices architecture ensure robust, real-time operations.

- Multi-Language Integration:

Python modules handle AI computations, while Node.js/Express provides fast, asynchronous request handling.
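The README does not specify how the Node.js layer and the Python modules exchange data. One common arrangement, shown here purely as an assumption, is a Python worker that reads one JSON message per line and writes one JSON reply per line, which a Node.js process could drive through a child process's stdin and stdout. The `handle` and `serve` functions and the message fields (`id`, `text`) are hypothetical, and the word count is a placeholder for a real AI computation.

```python
import json
from typing import Any, Dict, TextIO


def handle(message: Dict[str, Any]) -> Dict[str, Any]:
    """Placeholder for an AI computation; here it only counts words."""
    text = str(message.get("text", ""))
    return {"id": message.get("id"), "word_count": len(text.split())}


def serve(source: TextIO, sink: TextIO) -> None:
    """Read one JSON object per line from source, write one JSON reply per line."""
    for line in source:
        line = line.strip()
        if not line:
            continue  # ignore blank lines between messages
        try:
            message = json.loads(line)
            if not isinstance(message, dict):
                raise ValueError("message must be a JSON object")
            reply = handle(message)
        except ValueError as exc:  # json.JSONDecodeError is a ValueError subclass
            reply = {"error": str(exc)}
        sink.write(json.dumps(reply) + "\n")
        sink.flush()  # flush so the caller sees each reply immediately
```

Calling `serve(sys.stdin, sys.stdout)` from a script entry point would turn this into a long-running worker. Malformed lines produce an error reply rather than stopping the loop.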

- Dynamic Learning:

Processes natural language commands and continuously improves through feedback.

- Scalable API:

Exposes endpoints for real-time interactions and automated workflows.

- Content & Social Media Agents:

Automate content generation, social engagement, and SEO optimization.

- Professional & Web App Agents:

Optimize user profiles, job applications, and deploy scalable web applications with integrated monetization features.

- Behavioral Gamification:

Incentivizes contributions through rewards, challenges, and rapid feedback.

- User-Friendly Interfaces:

Designed to lower barriers for non-developers, making advanced AI accessible to all.

- Node.js 16.x or higher

- Python 3.8 or higher

- Compatible with Windows, macOS, or Linux

- A GitHub account and passion for innovation!

- Clone the Repository:

git clone https://github.com/Techiral/OmniMind.git

cd OmniMind

- Install Dependencies:

npm install

For Python components:

pip install -r requirements.txt

- Configure Environment Variables:

- Create a .env file in the root directory.

- Add your API keys and configuration settings (e.g., for GPT-4, MCP servers, and LinkedIn).

- Run the Application:

npm start

- Access the Dashboard:

- Open your browser and navigate to http://localhost:3000.
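Before starting the application, it can help to check that the .env file contains every setting you expect. The following is a minimal standard-library sketch, not part of the OmniMind codebase. The variable names in `REQUIRED` (`OPENAI_API_KEY`, `MCP_SERVER_URL`) are hypothetical placeholders, so substitute whichever keys your setup actually needs.

```python
import os
from pathlib import Path
from typing import Dict, Iterable, List

# Hypothetical setting names; replace with the keys your deployment requires.
REQUIRED = ["OPENAI_API_KEY", "MCP_SERVER_URL"]


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: Dict[str, str], required: Iterable[str]) -> List[str]:
    """Return the required names that are absent or empty."""
    return [name for name in required if not values.get(name)]


def load_env(path: str = ".env") -> Dict[str, str]:
    """Load the file, fail loudly on missing settings, then export the values."""
    env_file = Path(path)
    values = parse_env(env_file.read_text(encoding="utf-8")) if env_file.exists() else {}
    missing = missing_keys(values, REQUIRED)
    if missing:
        raise RuntimeError("Missing settings in .env: " + ", ".join(missing))
    os.environ.update(values)
    return values
```

Failing at startup with a list of the missing names is usually easier to debug than an authentication error raised later by an external API.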

OmniMind is engineered for simplicity and power:

- Input Your Command:

Use our interactive dashboard to describe the task, e.g., “Generate a comprehensive analytics dashboard for my e-commerce store.”

- Real-Time Execution:

The AGI Core Engine processes your command, orchestrates the required agents via MCP, and executes the task.

- Monitor & Iterate:

View live updates on your dashboard and customize the output as needed.

- Extend Functionality:

Easily integrate new modules using our plug-and-play design.
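A plug-and-play design is often built on a registry that new modules add themselves to. The sketch below shows one way this could look, assuming a decorator-based registry; `register_agent`, `run_task`, and the two sample agents are hypothetical names and do not describe OmniMind's actual extension API.

```python
from typing import Callable, Dict

AgentFn = Callable[[str], str]

# Registry of agent name -> handler; modules add entries when they are imported.
_AGENTS: Dict[str, AgentFn] = {}


def register_agent(name: str) -> Callable[[AgentFn], AgentFn]:
    """Decorator that registers a function as the handler for an agent name."""
    def decorator(fn: AgentFn) -> AgentFn:
        if name in _AGENTS:
            raise ValueError(f"Agent {name!r} is already registered")
        _AGENTS[name] = fn
        return fn
    return decorator


@register_agent("content")
def content_agent(task: str) -> str:
    # Placeholder behavior; a real agent would call an LLM or external API.
    return f"[content] drafted: {task}"


@register_agent("analytics")
def analytics_agent(task: str) -> str:
    # Placeholder behavior standing in for dashboard generation.
    return f"[analytics] dashboard planned: {task}"


def run_task(agent_name: str, task: str) -> str:
    """Dispatch a task to a registered agent, with a clear error if unknown."""
    try:
        agent = _AGENTS[agent_name]
    except KeyError:
        raise LookupError(
            f"No agent named {agent_name!r}; known agents: {sorted(_AGENTS)}"
        ) from None
    return agent(task)
```

With this pattern, adding a module means writing one decorated function; the dispatcher itself does not need to change.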

Example Command:

OmniMind --task "Generate an advanced analytics dashboard for my e-commerce store with real-time data and predictive insights"

Your expertise is key to shaping the future of OmniMind. We welcome contributions from developers, researchers, and enthusiasts alike.

- Fork the Repository, then create a feature branch:

git checkout -b feature/your-feature-name

- Develop Your Feature:

Follow our code guidelines and document your changes.

- Commit & Push:

git commit -m "Add [feature/bugfix]: description"

git push origin feature/your-feature-name

- Submit a Pull Request:

Describe your changes in detail and link any relevant issues.

- Engage with the Community:

Provide feedback, join discussions, and help us iterate quickly.

Every contribution, from code to documentation, helps build a stronger, more capable AGI for everyone.

We extend our deepest gratitude to:

- Techiral

- The entire open-source community for inspiring and contributing to our vision.

Special thanks to the teams behind MCP servers, LangChain, LangFlow, and all the API providers that make OmniMind possible.

This project is licensed under the MIT License – see the LICENSE file for details.

This README was last updated on March 30, 2025.

Invest your time in OmniMind and help build the first AGI that can truly do anything. Together, we will redefine the future of technology and create a legacy of innovation for the AI community.