Game Creation with XNA/Print version


Table of contents

Basics

Game Creation / Game Design

- Introduction

- Types of Games

- Story Writing and Character Development

- Project Management

- Marketing, Making money, Licensing

Mathematics and Physics

- Introduction

- Vectors and Matrices

- Collision Detection

- Ballistics

- Inverse Kinematics

- Character Animation

- Physics Engines

Programming

Audio and Sound

2D Game Development

- Introduction

- Texture

- Sprites

- Finding free Textures and Graphics

- Menu and Help

- Heads-Up-Display (HUD)

3D Game Development

- Introduction

- Primitive Objects

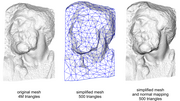

- 3D Modelling Software

- Finding free Models

- Importing Models

- Camera and Lighting

- Shaders and Effects

- Skybox

- Landscape Modelling

- 3D Engines

Networking and Multiplayer

Artificial Intelligence

Kinect

Other

Appendices

References

License

Preface

To start writing games for Microsoft's Xbox 360, one usually has to read many books, web pages and tutorials. This class project tries to introduce the major subjects, get you started and, if needed, point you in the right direction for finding additional material.

The idea behind this class project came from a colleague who observed that most class projects produce really nice results, which then usually disappear in some instructor's drawer. After reading about the possibility of using Wikibooks for class projects, we just had to give it a try.

Getting Started

If you are new to Wikibooks, you might first want to look at Using Wikibooks. Details for creating a class project can be found at Class_Project_Guidelines.

Other Wikibooks

There are also other wikibooks on related subjects that are quite useful:

- Creating a Simple 3D Game with XNA

- Video Game Design

- Blender 3D: Noob to Pro

- C Sharp Programming

- Introduction to Software Engineering

- Game Creation with the Unity Game Engine

Other Class Projects

Inspiration can also be drawn from other successful class projects, which are interesting in their own right and may be helpful for this project:

Basics

Introduction

Game development is neither easy nor cheap; it is a fast-growing, multi-billion-dollar industry. It is challenging in terms of both hardware and software and constantly uses cutting-edge technology.

The Xbox 360 contains some of the most sophisticated gaming hardware available. It has a PowerPC-based CPU with 3 cores running at 3.2 GHz, each with 2 hardware threads. For graphics it uses a custom ATI graphics processor (Xenos) running at 500 MHz with 48-way parallel floating-point shader pipelines.

Hence, game development has already made the paradigm shift away from single-core, single-threaded applications: on the Xbox we are dealing with 6 threads running in parallel on the CPU, 48 shader pipelines running in parallel on the GPU and hundreds of GFLOPS of computing power. Therefore, game programming is parallel programming!

So how can we learn about game development, and how can we get started? With XNA Game Studio and the XNA Framework, Microsoft has made it fairly easy. With openly available components, even a fourth-semester student can start writing a 3D racing car simulation. A very nice feature of XNA Game Studio is that you can run your programs not only on the Xbox but also on the PC, which is very convenient during development.

Before we can start writing code, we need to set up our environment, install the necessary software (including Visual Studio), and learn a little about C# and the basics of game programming. Handling input devices is also covered here.

Setup

For this book we will use Visual Studio 2008 and the XNA Framework 3.1. Although newer versions are available, we will stay with these older versions for several reasons.

Preparation

You should first make sure that you have a recent version of Windows, such as XP, Vista or 7, with the appropriate service packs installed. In general, it is a good idea to use the US version of the operating system. In addition, since at least DirectX 9 compatibility is needed, you may not be able to use a virtual machine (such as Parallels, VMware or VirtualBox) for XNA programming.

Install Visual C# 2008 Express Edition

First download Visual C# 2008 Express Edition from Microsoft. You can also use the Visual Studio Express version. Installation is straightforward: simply follow the wizard. After installation, make sure you run Visual Studio at least once before proceeding to the next step.

Install the DirectX Runtime

Download and install the DirectX 9.0c Redistributable for Software Developers. This step should not be necessary on newer Windows versions. First try to get by without it; if you later get a strange error message related to DirectX, come back and perform this step.

Install XNA Game Studio 3.1

After you have run Visual Studio at least once, you can install XNA Game Studio. First download XNA Game Studio 3.1, then run the installer and follow the instructions. When asked, allow communication with the Xbox and with network games.

Test your Installation

To see if our installation was successful, let's create a first project.

- Start Visual C# 2008 Express Edition

- select File->New Project; under 'Visual C#->XNA Game Studio 3.1' you should see 'Platformer Starter Kit (3.1)'; select it and click OK to create the project

- to compile the code use either 'Ctrl-B', 'F6' or 'Build Solution' from the Build menu

- to run the game press 'Ctrl-F5' and enjoy

- take a look at the code; among other things, notice that a 'Solution' can contain several 'Projects'

Next Steps (optional)

We will only develop games for the PC. If you also want to develop games for the Xbox, you need to become a member of Xbox LIVE and purchase a subscription (if your university has an MSDN-AA subscription, membership is included).

Advice

Pay attention to which XNA version you install, because each XNA Game Studio version only works with specific Visual Studio versions.

Compatible versions:

| Visual Studio | XNA Game Studio |
|---|---|
| 2005 | 2.0 |
| 2008 | 3.0, 3.1 |
| 2010 | 4.0 |

Authors

Sarah and Rplano

C-Sharp

When coding for the Xbox with the XNA Framework, we will use C-Sharp (C#) as the programming language. C-Sharp and Java are quite similar, so if you know one, you basically know the other. A good introduction to C-Sharp is the Wikibook C Sharp Programming.

C# has some features that are not available in Java; if you know C++, some of them may look familiar:

- properties

- enumerations

- boxing and unboxing

- operator overloading

- user-defined conversion (casting)

- structs

- read-only fields

Probably the biggest difference between C-Sharp and Java is delegates. They are used for events, callbacks and threading. Simply put, delegates are type-safe function pointers.

Properties

Properties are an easy way to provide getter and setter methods for variables. They have no equivalent in Java, unless you count Eclipse's feature for generating these methods automatically. Inside a setter, the keyword value refers to the value being assigned.
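A minimal sketch of a property, assuming a hypothetical Player class whose setter clamps negative values (the clamping rule is only an illustration). It also shows the C# 3.0 auto-implemented property, which works with Visual Studio 2008:

```csharp
public class Player
{
    // private backing field that actually stores the data
    private int health;

    // property with an explicit getter and setter
    public int Health
    {
        get { return health; }
        // 'value' holds whatever is assigned, e.g. player.Health = 50;
        set { health = value < 0 ? 0 : value; } // never store a negative health
    }

    // auto-implemented property (C# 3.0): the compiler generates the backing field
    public string Name { get; set; }
}
```

To the caller, `player.Health = -5;` looks like a plain field assignment, but reading `player.Health` afterwards returns 0 because the setter ran.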

Enumerations

Older Java code often uses interfaces to store constants; in C# the enumeration type (enum) is used for this. Notice that the underlying type of an enum must be an integral type (int by default).
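A short sketch with hypothetical game-related enums: one uses the default int values, the other an explicit byte underlying type. The helper StateInfo.Describe is also made up for illustration and shows that enums work naturally with switch:

```csharp
// Hypothetical game states; the underlying type defaults to int
public enum GameState
{
    Menu,          // 0
    Playing,       // 1
    Paused,        // 2
    GameOver = 10  // explicitly assigned value
}

// An explicit integral underlying type is also possible (byte here)
public enum Direction : byte
{
    North = 1,
    East = 2,
    South = 4,
    West = 8
}

public static class StateInfo
{
    // enums can be used directly as switch labels
    public static string Describe(GameState state)
    {
        switch (state)
        {
            case GameState.Menu: return "in menu";
            case GameState.Playing: return "playing";
            case GameState.Paused: return "paused";
            default: return "game over";
        }
    }
}
```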

Boxing and Unboxing

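This corresponds to Java's wrapper types, and Java now also boxes automatically. Interestingly, the original value and the boxed value are not the same: boxing copies the value into a new object, so later changes to the original do not affect the boxed copy. Also notice that an unboxed local value lives on the stack, whereas the boxed object lives on the heap.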
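The following sketch (the class name BoxingDemo is hypothetical) shows that a boxed value is an independent copy and that unboxing requires an explicit cast:

```csharp
public static class BoxingDemo
{
    // Returns { original, unboxed } after boxing, changing and unboxing.
    public static int[] Run()
    {
        int original = 42;          // value type, stored in a local variable
        object boxed = original;    // boxing: the value is copied into a new heap object
        original = 7;               // changes only the original, not the boxed copy
        int unboxed = (int)boxed;   // unboxing needs an explicit cast to the exact type
        return new int[] { original, unboxed };
    }
}
```

`BoxingDemo.Run()` returns `{ 7, 42 }`: the box still holds the value it was created with.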

Operator Overloading

This is a feature that you may know from C++, or from the '+' operator that Java overloads for its String class. In C# you can overload the following operators:

- unary: +, -, !, ~, ++, --, true, false

- binary: +, -, *, /, %, &, |, ^, <<, >>, ==, !=, <, >, <=, >=

For instance, for vector and matrix data types it makes sense to overload the '+', '-' and '*' operators.
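As a sketch of such a vector type, here is a hypothetical Vec2 struct (XNA's own Vector2 already provides these operators, so this is only for illustration). Operator overloads are always public static methods declared with the operator keyword:

```csharp
// Hypothetical 2D vector type; XNA's Vector2 already offers these operators.
public struct Vec2
{
    public readonly float X;
    public readonly float Y;

    public Vec2(float x, float y) { X = x; Y = y; }

    // binary +: component-wise addition
    public static Vec2 operator +(Vec2 a, Vec2 b) { return new Vec2(a.X + b.X, a.Y + b.Y); }

    // binary -: component-wise subtraction
    public static Vec2 operator -(Vec2 a, Vec2 b) { return new Vec2(a.X - b.X, a.Y - b.Y); }

    // unary -: negation
    public static Vec2 operator -(Vec2 v) { return new Vec2(-v.X, -v.Y); }

    // scalar multiplication, in both operand orders
    public static Vec2 operator *(Vec2 v, float s) { return new Vec2(v.X * s, v.Y * s); }
    public static Vec2 operator *(float s, Vec2 v) { return v * s; }
}
```

Defining '*' twice lets both `v * 2f` and `2f * v` compile; C# does not infer the swapped order on its own.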

User-Defined Conversion

Java has built-in casting, and so does C#. In addition, C# lets you define your own implicit and explicit conversions, which means you define the casting behavior yourself. This usually makes sense between types that do not inherit from each other, such as cousins in a class hierarchy. However, there is a restriction: conversions already defined by the class hierarchy (to a base class or a derived class) cannot be redefined.
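A minimal sketch with two hypothetical angle types that do not inherit from each other. The conversion from Degrees is declared implicit and the one from Radians explicit; which direction gets which keyword is a design choice made here for illustration:

```csharp
using System;

// Hypothetical angle types; neither derives from the other.
public struct Degrees
{
    public readonly double Value;
    public Degrees(double value) { Value = value; }

    // implicit: applied automatically, e.g. Radians r = new Degrees(90);
    public static implicit operator Radians(Degrees d)
    {
        return new Radians(d.Value * Math.PI / 180.0);
    }
}

public struct Radians
{
    public readonly double Value;
    public Radians(double value) { Value = value; }

    // explicit: the caller has to write a cast, e.g. (Degrees)someRadians
    public static explicit operator Degrees(Radians r)
    {
        return new Degrees(r.Value * 180.0 / Math.PI);
    }
}
```

A common convention is to make a conversion implicit only if it cannot fail or surprise the caller, and to require an explicit cast otherwise.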

Structs

Structs basically allow you to define objects that behave like primitive data types. Unlike class instances, which are stored on the heap, local struct variables are stored on the stack. Structs are very similar to classes: they can have fields, methods, constructors, properties, events, operators, conversions and indexers, and they can also implement interfaces. However, there are some differences:

- structs may not inherit from classes or other structs

- they have no destructor methods

- structs are passed by-value not by-reference
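The by-value behavior can be seen in this sketch; PointStruct, PointClass and ValueSemantics are hypothetical names. The same method body changes the caller's data for the class but only a copy for the struct:

```csharp
public struct PointStruct { public int X; }
public class PointClass { public int X; }

public static class ValueSemantics
{
    // receives a copy of the struct: the caller's variable is not changed
    static void Push(PointStruct p) { p.X += 10; }

    // receives a reference to the object: the caller sees the change
    static void Push(PointClass p) { p.X += 10; }

    public static int[] Run()
    {
        PointStruct s = new PointStruct();  // X starts at 0
        PointClass c = new PointClass();    // X starts at 0
        Push(s);
        Push(c);
        PointStruct copy = s;               // assignment copies the whole struct
        copy.X = 99;                        // does not affect s
        return new int[] { s.X, c.X, copy.X };
    }
}
```

`ValueSemantics.Run()` returns `{ 0, 10, 99 }`.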

Read-Only Fields

C#'s const keyword differs from Java's final in that a const must be given its value at declaration time, and that value must be a compile-time constant. A way around this is the readonly keyword. It still has a restriction, though: a readonly field can only be assigned in its declaration or inside a constructor.
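A small sketch contrasting const and readonly, using a hypothetical Level class:

```csharp
public class Level
{
    // const: compile-time constant, fixed at declaration, implicitly static
    public const int MaxPlayers = 4;

    // readonly with an initializer in the declaration
    public readonly int Seed = 12345;

    // readonly assigned at runtime, inside the constructor
    public readonly string Name;

    public Level(string name)
    {
        Name = name; // allowed: we are inside a constructor
    }

    // public void Rename(string n) { Name = n; } // would not compile: readonly field
}
```

Because MaxPlayers is const, it is accessed through the class (`Level.MaxPlayers`), whereas each Level object has its own Name.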

Delegates

Usually, when you pass something to a method in Java, it is a variable or an object. In C# it is also possible to pass methods. This is what delegates are all about. Note that delegate types are also classes. A good way of understanding delegates is to think of a delegate as something that gives a name to a method signature.

In addition to normal delegates there are also multicast delegates. If a delegate is the call to one method, then a multicast delegate is the call to several methods, one after the other. Any delegate can hold several methods, but this is mainly useful for delegates with return type void, because only the return value of the last method called is passed back to the caller.
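A sketch of a delegate type and a multicast delegate; the names ScoreHandler and DelegateDemo and the log messages are hypothetical. Lambdas (C# 3.0) are used to create the delegate instances:

```csharp
using System.Collections.Generic;

// A delegate type gives a name to a method signature: void (int)
public delegate void ScoreHandler(int points);

public static class DelegateDemo
{
    public static List<string> Run()
    {
        List<string> log = new List<string>();

        // a delegate pointing to one (anonymous) method
        ScoreHandler handler = points => log.Add("score +" + points);

        // += adds a second method: the delegate is now multicast
        handler += points => log.Add("play sound");

        // invoking it calls both methods, in the order they were added
        handler(100);
        return log;
    }
}
```

After `DelegateDemo.Run()`, the log contains "score +100" followed by "play sound".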

Callbacks

Callback methods are used quite often in C and C++ programming, and they are extremely useful. The idea is that instead of waiting for another thread to finish, we give that thread a callback method that it can call once it is done. This is very important for tasks that take a long time, during which we want the user to be able to do other things. C# uses delegates to accomplish this.
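A minimal sketch of a callback, assuming a hypothetical Loader.LoadLevelAsync method whose loading work is only simulated. The callback is passed as an Action&lt;string&gt; delegate and invoked by the worker thread when it is done:

```csharp
using System;
using System.Threading;

public static class Loader
{
    // Starts the (simulated) long-running work on a background thread and
    // invokes the callback from that worker thread once the work is done.
    public static Thread LoadLevelAsync(string levelName, Action<string> onLoaded)
    {
        Thread worker = new Thread(() =>
        {
            // ... expensive loading work would happen here ...
            onLoaded("loaded " + levelName); // call back the caller
        });
        worker.IsBackground = true; // do not keep the process alive for this thread
        worker.Start();
        return worker;              // returned so callers can Join() if they need to
    }
}
```

Note that the callback runs on the worker thread, not on the thread that calls Update(), so any game state it touches has to be synchronized, for example with a lock.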

Inheritance

Object-oriented concepts in C# are very similar to Java's, apart from a few minor syntax differences. Only with regard to method overriding in an inheritance chain does C# provide more flexibility than Java: it allows very fine-grained control over which polymorphic method will actually be called. For this it uses the keywords 'virtual', 'new' and 'override'. In the base class you need to declare the method that you want to override as 'virtual'. In the derived class you can then choose between 'override', which replaces the base implementation polymorphically, and 'new', which merely hides it (and can be combined with 'virtual' to start a new chain of overrides).
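The difference between 'override' and 'new' shows up when a derived object is used through a base-class reference. Enemy and Orc are hypothetical classes:

```csharp
public class Enemy
{
    // virtual: derived classes may override this method
    public virtual string Attack() { return "Enemy attacks"; }

    // non-virtual method
    public string Taunt() { return "Enemy taunts"; }
}

public class Orc : Enemy
{
    // override: used even when called through an Enemy reference
    public override string Attack() { return "Orc smashes"; }

    // new: hides the base method; an Enemy reference still calls Enemy.Taunt()
    public new string Taunt() { return "Orc roars"; }
}
```

With `Enemy e = new Orc();`, `e.Attack()` returns "Orc smashes", while `e.Taunt()` returns "Enemy taunts" and only `((Orc)e).Taunt()` returns "Orc roars".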

Game Loop

Programming a game console (GC) is not quite the same as programming a regular PC. Whereas PCs have sophisticated operating systems such as Windows, Linux or Mac OS, on a game console we are much closer to the hardware. This has to do with the special requirements of games. We must consider the following differences between PCs and GCs:

- on a GC usually only one (multithreaded) program is running, thus there is no real OS

- on a GC raw graphics power is needed, but there is no GUI with windows and widgets

- a GC usually has no keyboard or console, sometimes not even a hard disk

Hence, you will find no classes with names like Window, Form, Button or TextBox. Instead you find classes with names such as SpriteBatch, Texture2D and Vector3, and we talk about the Content Pipeline, textures and shaders.

Usually, programs for PCs are event-driven: when the user clicks somewhere, something happens; if the user doesn't click anywhere, nothing happens. On game consoles (GC) this is a little different. Here we often find the so-called Game Loop. For the Xbox 360, or rather the XNA Framework, it consists of three methods:

- LoadContent()

- Update( GameTime time )

- Draw( GameTime time )

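LoadContent() is called once at the start of the game to load images, sounds, textures, etc. Update() is used for getting user input, updating the game state, and handling AI and sound effects. Draw() is called to display the game. This separation of game state and display resembles the MVC pattern. The Game Loop itself consists of the two methods Update() and Draw() being called repeatedly by the framework. They are not necessarily called in strict alternation: with a fixed time step, the framework may call Update() several times before the next Draw() when the game is running slowly.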
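To illustrate why Update() and Draw() need not alternate, here is a hypothetical, framework-free model of a fixed-time-step loop. It is not XNA's implementation (for example, it omits how the framework waits when the game runs fast); it only records which method would run when a frame takes longer than one step:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified model of a fixed-time-step game loop (not XNA's code).
public class FixedStepLoop
{
    private readonly TimeSpan step;               // game time simulated by one Update()
    private TimeSpan accumulated = TimeSpan.Zero; // real time not yet simulated
    public readonly List<string> Calls = new List<string>();

    public FixedStepLoop(TimeSpan step) { this.step = step; }

    // One pass of the loop, given the real time elapsed since the previous pass.
    public void Tick(TimeSpan elapsed)
    {
        accumulated += elapsed;
        // catch up: one Update() per full step of accumulated time
        while (accumulated >= step)
        {
            Update(step);
            accumulated -= step;
        }
        Draw(); // then draw the latest state once
    }

    private void Update(TimeSpan dt) { Calls.Add("Update"); }
    private void Draw() { Calls.Add("Draw"); }
}
```

With a 16 ms step, a 16 ms frame produces "Update, Draw", but a slow 40 ms frame produces "Update, Update, Draw": the game state stays on schedule while fewer frames are drawn.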

Input Devices

Introduction

Input devices are one of the most important topics in a handbook for game creation. A computer (or Xbox) game lives on interaction with the user, which is why there needs to be a way to check the user input and let the game react to it.

XNA makes it very easy to access the input devices. It offers an easy-to-use and understandable API for the mouse, keyboard and gamepad, so it is possible to write a user-interaction scheme in a short time. Basically, XNA offers easy access to:

- Mouse

- Keyboard

- Gamepad

The basic concept is the same for all controller types. XNA provides a set of static classes (one for each type) which can be used to retrieve the status and all properties (e.g. pressed buttons, movements, ...) of the input device.

This detection is usually placed in the Update() method of the game loop so that the status is retrieved as often as possible. Storing the states of all input devices in class variables allows you to check the status in other methods and classes. A common solution is to have an array of boolean variables in the class that represent the status of all controllers, namely the pressed buttons on the controller, the mouse movements and clicks, and the pressed keys on the keyboard.

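    protected override void Update(GameTime gameTime)
    {
        // take a snapshot of the keyboard state once per frame
        KeyboardState kbState = Keyboard.GetState();

        // ... react to the input and update the game state ...

        base.Update(gameTime);
    }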

Windows vs. Xbox

Windows and Xbox games are usually played in different ways. In general, a Windows computer is controlled by a mouse and a keyboard, whereas an Xbox is usually controlled by a gamepad. Therefore the code needs a way to decide whether it runs on Windows or on the Xbox, so that it can choose a default controller for the game. A conditional compilation directive can do this:

    #if XBOX
        // this code is compiled only into the Xbox project
    #else
        // this code is compiled into all other projects, e.g. the Windows project
    #endif

But it is also possible to connect a mouse or keyboard to an Xbox, and to connect an Xbox controller to a Windows computer. So in most cases it is better to check, for example, whether a gamepad is connected. Another way of dealing with this problem is to store the user's preferred controller in a variable, so the user can decide which controller he wants to use to play your game.

Mouse

First, get a snapshot of the mouse state by calling the static GetState() method of the Mouse class. The returned MouseState object gives you access to the properties of the connected mouse.

    MouseState mouse = Mouse.GetState();

    bool leftButton = (mouse.LeftButton == ButtonState.Pressed);     // left mouse button
    bool middleButton = (mouse.MiddleButton == ButtonState.Pressed); // middle mouse button
    bool rightButton = (mouse.RightButton == ButtonState.Pressed);   // right mouse button

    int x = mouse.X;                     // horizontal mouse position
    int y = mouse.Y;                     // vertical mouse position
    int scroll = mouse.ScrollWheelValue; // scroll wheel value

The state of the mouse buttons is read through the properties LeftButton, MiddleButton and RightButton. Comparing such a value with ButtonState.Pressed or ButtonState.Released tells you the state of that button. The example above stores the state of each button in a boolean variable that is true if the associated button is pressed.

The mouse position on the screen is stored in the X and Y properties of the mouse state. Inside the game window these values are non-negative, because the origin (0,0) is the upper-left corner. They can be compared with earlier mouse positions (in the game logic) to detect a specific movement of the mouse. A simple example:

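    // oldX and oldY are class fields that hold the position from the previous frame
    MouseState mouse = Mouse.GetState();
    int x = mouse.X;
    int y = mouse.Y;

    int deltaX = x - oldX; // horizontal movement since the last frame (positive = right)
    int deltaY = y - oldY; // vertical movement since the last frame (positive = down)

    oldX = x;              // remember the current position for the next frame
    oldY = y;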

Most modern mice also have a scroll wheel, which games often use, for example to zoom, to scroll or to switch between weapons. The property ScrollWheelValue is an integer holding the cumulative scroll value since the game started.

To recognize the movement of the scroll wheel, it is necessary to store the previous value and compare it with the current one. The sign of the difference indicates the scroll direction, and its absolute value indicates how far (and thus how fast) the wheel was scrolled.
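This comparison can be wrapped in a small helper. ScrollTracker is a hypothetical class, written without XNA types so that it can be tested on its own; in a game you would feed it `Mouse.GetState().ScrollWheelValue` once per Update(). The names "forward" and "backward" assume the value grows when the wheel is turned away from the user:

```csharp
// Hypothetical helper: remembers the previous cumulative scroll value
// and reports the change since the last call.
public class ScrollTracker
{
    private int previous;

    public ScrollTracker(int initialValue) { previous = initialValue; }

    // The sign of the result gives the direction,
    // the absolute value how far the wheel was turned.
    public int Update(int currentValue)
    {
        int delta = currentValue - previous;
        previous = currentValue;
        return delta;
    }

    // Maps a delta to a direction name (assumes positive = forward).
    public static string Direction(int delta)
    {
        if (delta > 0) return "forward";
        if (delta < 0) return "backward";
        return "none";
    }
}
```

Create the tracker once (e.g. in LoadContent()) with the current ScrollWheelValue, so that the first call does not report the whole accumulated value as one big scroll.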

Keyboard

Checking the state of the keys on a keyboard is very simple. First, get a KeyboardState object by calling the static method GetState of the Keyboard class. This instance lets you retrieve the state of specific keys.

    KeyboardState keyboard = Keyboard.GetState();

    bool keyB = keyboard.IsKeyDown(Keys.B);            // key "B" on keyboard
    bool keyArrowLeft = keyboard.IsKeyDown(Keys.Left); // left arrow key

The boolean variables keyB and keyArrowLeft now store "true" if the specific key is pressed right now or "false" if it is not pressed. This method can be repeated for each key that is of interest for the application or game.

It is also possible to directly get an array of all keys of the keyboard that are currently pressed. A call of the method GetPressedKeys returns an array of Keys that can be traversed key by key.

    KeyboardState keyboard = Keyboard.GetState();
    Keys[] keys = keyboard.GetPressedKeys();     // all keys currently held down
    foreach (Keys key in keys) { /* react to each pressed key */ }

Gamepad

The gamepad is the most convenient way to play a game on the Xbox. Although XNA is designed for developing games for both Windows and the Xbox, its built-in API only supports the original Xbox controller. Therefore you have to decide whether you want to force your users to use (and maybe buy) the Xbox gamepad, or whether you want to support other gamepads as well, for example from Logitech.

Supporting other gamepads might be more comfortable for the user, though it means more coding effort for the developer. This chapter describes the implementation both for the Xbox controller and for other controllers.

Xbox Gamepad

Accessing this input device is nearly as easy as checking the state of the mouse or keyboard. One important difference is that XNA supports up to four gamepads connected to the Xbox or Windows computer.

So it is (often) necessary to implement a loop over all gamepads that are connected to check their states individually. How this (and more) can be done is explained in the following paragraphs.

    GamePadState[] gamePad = new GamePadState[4];

    for (int i = 0; i < 4; i++)                        // loop over up to 4 gamepads
    {
        gamePad[i] = GamePad.GetState((PlayerIndex)i); // GetState expects a PlayerIndex
        if (gamePad[i].IsConnected)
        {
            // gamepad i is connected: read its buttons and sticks here
        }
    }

In this loop you can access all attributes like the buttons (front and shoulder), the digital pad and the two analog sticks. Here is how you do it:

    bool aButton = (gamePad[0].Buttons.A == ButtonState.Pressed);     // button A
    bool leftDigital = (gamePad[0].DPad.Left == ButtonState.Pressed); // left button on digital pad
    float leftStick = gamePad[0].ThumbSticks.Left.X;                  // horizontal position of left stick (-1.0f to 1.0f)

The rumble effect makes the gamepad vibrate and gives the player special feedback on his actions in the game, for instance when he is hit by an opponent in a shooter or crashes in a racing game. The first parameter selects the controller; the second and third parameters set the speed of the left and right motors, from 0.0f (off) to 1.0f (full).

    GamePad.SetVibration(PlayerIndex.One, 0.5f, 0.5f); // make the first controller rumble at half strength
    GamePad.SetVibration(PlayerIndex.One, 0f, 0f);     // stop the rumbling again

Other Gamepads

XNA does not support gamepads other than the original Xbox controller, but you can add support for them with a free library called SlimDX.

In addition you need a helper class that can be found here - it uses SlimDX to check the gamepad state of controllers that are not the original Xbox controller.

If you have downloaded, installed and integrated both the SlimDX library and the helper class you can use the following code to check the gamepad states - like you have done with the Xbox controller in XNA.

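    // GameController and GameControllerState come from the SlimDX helper class, not from XNA;
    // controller is a class field
    controller = new GameController(this, 0);             // 0 = number of the gamepad
    GameControllerState state = controller.GetState();
    bool button1 = state.GetButtons()[1];                 // button 1 pressed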

Kinect

Kinect is a motion-sensing camera for the Xbox 360 that recognizes your movements in front of the television, so games can be controlled with your body alone. Developers can use the Kinect framework to integrate this into their games.

Game Creation / Game Design

Introduction

Here we first consider what types of games exist and the basics of story writing and character development. Project management, marketing, making money and licensing are also briefly touched upon.


Types of Games

There are many kinds of games, including non-computer games. They can be grouped by genre or by platform, for example:

- role playing

- card games

- chess, go

- browser games / Second Life ...

- Nintendo

- Playstation/XBox etc

- 2D

- 3D

- strategy


Authors

Story Writing and Character Development

A good game lives and dies with its characters and its story. A good story is what catches the player, keeps him interested and makes him want to continue. The story is the frame for all the action taking place, wrapping everything together. But story alone will never keep the player going: there is no good story without good characters, and vice versa. The characters in the story are just as important. This applies not only to the main character, but to all characters he interacts with and all characters who motivate or influence him to do the things he does. Therefore, for a good story, it is crucial to create the story and all characters within it so that they form a coherent unity. Imagine a space trooper crossing Frodo's path in The Lord of the Rings. That simply wouldn't fit and would definitely ruin the story.

But what exactly is a good story? And what exactly are good characters that fit this very story? As always, whether a story and its genre are interesting is a question of taste and lies in the eye of the beholder. Whether a story is written well or badly, however, follows certain mechanics. The same applies to the characters in the story. It is personal taste whether you like the good guy or prefer the bad guy. But creating a character who is “self-contained” and well made, again, follows certain mechanics.

How you write a story or create your characters is totally up to you. But looking at what other authors and game developers do makes it easier. There are certain tools and techniques for writing the story and creating the characters for your game. The more detail you want to put in, the more research you should do. Many books can help you dive deeper into story writing and character development; covering it all here would simply be too much. This article gives you a basic insight into character development and story writing for games.

Character Development

On the following pages I describe techniques for developing a character for a game or a story. Character development in the sense of progress while playing (gaining experience, increasing levels, learning skills and so on) is not part of this article, although certain links refer to it. The focus of this article is creating a character before the game.

Preliminary Work

Probably the most important thing when creating a character is to know its purpose. Are you creating the main character of the story, the villain, a sidekick, a servant, a random companion or something else? Knowing the role of the character makes it easier to define his behaviour, his actions, his way of thinking and his overall appearance. After you have chosen the role of your character, the actual work begins. Inform yourself! Read as much about this type of character as you can. Ask yourself questions to define the character.

- Do characters like this already exist in other games or stories?

- What has been written by other authors?

- Are there already stereotypes of this character and do they fit to your creation?

- Is he a servant? How does it feel to serve?

- Is he a soldier? How does it feel to be in battle?

- Is he a priest? How does it feel to pray to god?

Learn as much about the character as you can. Check all available resources. Talk to friends. Keep asking questions. If you don't find exactly what you're looking for, rely on your own imagination and feelings. In the end, it's your creation. There are certain things to consider, though. Are you creating a character who is part of an already existing universe (like an orc, a dwarf or a human)? If so, think about the characteristics already attributed to them: orcs are green, dwarfs are small and humans can't breathe underwater. Do you want to stick to these basic characteristics that are already present in the player's imagination, or do you want to create something totally new? Whatever you decide, keep in mind how the player might react to your creation.

Point of View and Background

In order to make your character authentic, try to look through his eyes. Try to be your character and keep your eyes open to the world and how the character perceives it. How do things look? Why do they look like this? How do things feel? Why do they feel that way? What feels good? Why does it feel good? The WHY of things sometimes is more important than the things themselves. To understand the WHY, it is necessary to understand the background of your character.

A real person develops a certain understanding of the world and an individual point of view, depending on his own experience, the way he grew up and everything that has happened in his life. And probably only he can tell how he became the person he is today. Since your character is a creation of your imagination, you are the only one who can tell how he became the character he is. The more specifically you describe the character's background, the easier it will be for the player to understand him and empathize with him. The player does not necessarily have to agree with the character's attitude, but he will more likely understand it if you provide a detailed explanation for his behaviour.

The more detail you think through, the more realistic your character will be. You decide how much detail your character needs, but in general the main characters in your game should possess more detail and depth than any character in a supporting role.

Motivation & Alignment

Understanding the WHY of things is a good start for understanding the motivation behind the decisions your character makes. Motivation is the force that drives all of your characters, be they good or evil. What motivates the plumber Mario to make all these efforts? To rescue the princess and stop Bowser. What is Bowser's motivation? To take over the Mushroom Kingdom. Both of them are driven by their motivation.

To understand the motivation of a character, and eventually agree with it, you need to know as much about the character as possible. Giving your character an alignment will help to understand his actions and might even help to understand and clarify his motivation.

Mario wants to save the princess and never does anything bad, so he is easily classified as good. Bowser is just as easy to classify: he embodies everything that is considered bad, so he is the bad guy, period.

But saying “Well, he is a bad guy, and that’s why he is doing bad things.” won’t do the trick for more detailed characters. The more detailed a character gets, the more complicated it is to classify him as good or evil. Some people do the right things for the wrong reasons and some do the wrong things for the right reasons. Who is the good and who is the bad guy?

To help you align your character with a side, look at his intention. When he does a good thing and also intended to do a good thing, he is probably a good guy. But no one is entirely good or purely evil. Most characters are neutral until their actions prove them to be good or evil. Here is a list of the three stereotypes and their attributes to help you classify your character:

Good

- does the right things for the right reasons

- loves and respects life in every form

- tries to help others

- puts others' interests above his own

- sticks to the law

- is driven by the wish to do the right thing even when not knowing what the right thing is

Be careful when creating your hero. It is no fun running through the game being invincible, too strong or too clever. If things are too easy, players will lose interest very fast. To be interesting, a hero should not be perfect. He should have some weaknesses and flaws the player can identify with. Most heroes don't even know they are heroes. They can be just like you and me, living their lives and doing their daily work. Suddenly something happens and they simply react. Driven by their inner perception of what is right and wrong, driven by their alignment, they react in a way that slowly transforms them into what we would call a hero. Frodo, for example, never chose to be a hero; he was chosen and became a hero while fulfilling the task he was given.

A hero needs to grow with his challenges, and exactly that is what makes the hero so interesting for the player: the change that happens, and the fact that the player witnesses the transformation from a normal guy into the saviour of the world. While creating your character, keep in mind that every hero has skills and talents that enable him to fulfill his task. Some of them are special or even unique, which makes the hero appear special. But what really makes the hero interesting and appealing to the player are his flaws and merits. A knight in shining armor with a huge sword and a big shield who slays dragons seems impressive and admirable. But giving him flaws, like being afraid of small spiders, makes him much more realistic and brings him closer to the player.

Neutral

- sometimes does the right things

- sometimes behaves selfishly and does the wrong things

- hard to say which side they are on; sometimes they don't know themselves

- even though they call themselves neutral, their actions sometimes prove otherwise

- a good alignment for sidekicks of the hero and the villain, e.g. as devil's advocate

- anti-heroes can be neutral and be pushed from one side to the other

Neutral characters don't choose a side per se. They base their decisions and actions on their mood at a specific point. Most characters are neutral until they have to decide which way to go. And after that decision, they can still change their mind again. Whatever suits them best.

Evil

- is selfish

- greedy, insane or pure evil

- shows no interest in others

- puts his own goals above everything else

- must have a strong motive

- the reader must love to hate him, simply because he embodies everything we hate or would never consider doing

The bad guy is the hero's number one motivation. Either he threatens peace and harmony, kidnaps the princess, wants to destroy the world or whatever. He actually makes the hero become a hero. The villain does not always think of himself as a bad person; he is convinced that what he is doing is right (in his world), and in his eyes the hero is the bad guy trying to ruin everything. Point of view is very important.

Sometimes the bad guy isn't bad because he chose to be, but rather because complicated circumstances forced him to be. Whether or not you tell the player is totally up to you. There are different ways to approach the creation of the bad guy. Either you say he is a bad guy and does all the things bad guys do; in that case the player probably won't build a closer relationship with him. He is just the one who needs to be wiped out in order to restore peace and harmony. Or you make your bad guy more sophisticated: disguise him so that the player does not recognize him as the bad guy from the beginning. Or you give him some features that make him appear nice in a certain way; for example, he loves his dog and would do anything for it. Or you create an inner conflict that throws him from side to side, something that lets the player sympathize with the bad guy. Keep in mind that the bad character should also have skills, talents, flaws and merits to make him as realistic as possible.

For more detailed information about archetypes, their features and use in stories check Archetypes.

Summary: Character Development

At the end of the day it's your character. You alone decide how he will look, how he will behave and how he will react. And because you spend many hours thinking about what your character should be like, it is important to make sure the player understands your intention. The more detail a character gets, the more interesting he will be. The more interesting a character is, the more the player will like him. And the more the player likes your character, the more he will enjoy the game. It is pretty easy to overload a character with features that all fit a single alignment: make the bad guy the most evil creature you can imagine, or make your hero the shiniest of all white knights. But the more you mix traits within a character, the more realistic he will be. However you decide and whatever character you create, make sure it fits your story and your purpose.

Story Writing / Story Telling

Writing a story often begins with an idea. Where that idea comes from may differ, though. You may want to make a game out of a movie or a book you like, create something totally new, or make a sequel to an existing game. Depending on the source of your idea, different things have to be considered when writing. In general, writing a story and creating a game should go hand in hand. It's never a good idea to clamp a story onto an already existing game design, or vice versa. Design and story both grow, so it's a good idea to let them grow in parallel.

Adapting a movie or a book

When adapting a movie or a book, you necessarily take things from the original: the story, the main characters, the setting, or all of it. Otherwise it wouldn't be an adaptation. If you want to adapt the whole movie, you need to be clear about certain things. You need to stay true to the original material. At the same time, be aware that not everything that works well in a movie works in a game. Some parts of the story move on without the main character even being present. You have to fill in that information with cutscenes or videos, which take the player out of the game. That's no big deal in a movie, because you sit and watch it anyway and don't interact with it. For a player, though, it can be frustrating not to be able to interact in a specific situation. Another point to consider is that the player might already know the end of the game because he knows the movie. This could take away some of the thrill, but on the other hand it could help the player identify with the hero, because he is doing all the things the hero does in the movie.

Creating a sequel

The main reason for creating a sequel is the success the original game already had. There is an existing market with fans and maybe even working merchandise. Furthermore, the name is already established, so sequels mostly start with a small advantage. The flip side of sequels is the fans' expectations. The new title has to be bigger, faster, better… simply more. Often better graphics, bigger explosions, better sound and a cooler style are not enough to guarantee a sequel's success. There are several ways to approach a sequel. The first is to simply take what worked in the last part, rehash it a bit, add no new features and just continue the story. That's no guarantee of failure, but it certainly leads in that direction. A better way is to identify the key features of the original game, polish them up, place them in a new setting and create a game that relates to the original but can also stand on its own. The good thing is that you don't have to start from scratch but can reuse much of the work already done for the original. So you have more time to focus on the details that were neglected or not even thought of in the original. Focus on the main character: give him more depth and add to his story. Take some of his abilities and think of new ways to use them. Improve or weaken them. A big bonus is that you already know exactly what was good and what was bad about the original. Take the good things, add more of them and wipe out the bad stuff. But just taking and polishing what was there before will not be enough. Add more content by adding more story and detail to your characters. Add more features, but don't overload the game.

Creating a whole new story

When creating a whole new story, you are quite free to do whatever you want. Keep certain things in mind, though. If you want your game to be successful and sell well, you need to know what kinds of games are being played at the moment and why. You should consider how players think and what they want. Next, consider what kind of story you want, and then choose a game style that matches it. Decide on a genre (Game Genres / Types of Games). Not all genres are able to carry the story of your game. Existing genres may have content that serves your needs and is already established; on the other hand, genres have boundaries that are not easy to cross. Whatever genre and style you choose for your game, stick to it throughout the whole game.

How to actually write a story

Every story needs a title, a prologue, a main part and an epilogue. Furthermore, a story needs characters, because there is no story without characters and no character without a story. This may seem a bit flat, but that's all there is to it. Let's dive a bit deeper into the individual parts.

Title

The title should fit your story and make people want to play the game. It should partially reveal what the game is about, but not say too much, to keep the thrill.

Prologue

The prologue usually starts with a description of the game world as it is. The player gets a first impression and a feeling for the setting. A good prologue rouses the player's desire to explore. At this point, everything is still in order. Furthermore, the prologue gives the background details needed to understand what is going on.

Main part

The main part usually starts with a call to adventure or a reason to start playing, whatever that may be in your story: the princess gets kidnapped, your character's village gets destroyed by Dark Riders, or your character simply wants to break out of his world. In Joseph Campbell's Monomyth, the hero refuses this first call to adventure and needs further persuasion before he finally starts his journey. In games, however, the player wants to play, to explore and to take the journey; that's why he plays the game. So the call to adventure gets our character going. On this journey the character faces multiple challenges that he has to overcome to come a step closer to his final goal (whatever that is…). With every challenge he passes, the character grows stronger and comes closer to his goal. But every challenge that is overcome is followed by an even greater challenge. Lee Sheldon writes in his book Character Development and Storytelling for Games:

- “We have our crisis then. A major change is going to occur. Only one? No. As we move through the story, crisis follows crisis, each one escalating tension and suspense.

- Every one of these crises needs an additional element: a climax. Egri says, ‘crisis and climax follow each other, the last one always on a higher plane than the one before…’

- …Resolution is simply the outcome of the climax that is a result of the crisis. The story is built from this three-step dance. Every one of these crises has reached a climax and has been resolved, only to have the stakes raised higher, and the next crisis always looming as even more profound.”

But challenges should not always be slaying evil creatures or escaping from a trap. Personal sacrifice or the loss of a beloved companion can be a challenge as well. Most of you might remember Gandalf falling to his assumed death in the Mines of Moria while fighting the Balrog. But Frodo and his fellowship decided to keep their eyes on the goal, grow with the challenge and move on. Challenges can also be collecting certain things, learning how to craft, or solving puzzles. And each challenge has a small reward, be it experience, a new weapon, a new companion or just something that makes your character stronger and prepares him for his “final battle”. Small challenges or quests keep the player motivated. Furthermore, the character should meet several other characters. Each of them will have their own intent and influence on him. Some want to help him advance on his journey, and some want to hinder or even destroy him.

The main part usually ends with the final encounter and the ultimate reward, be it the demon lord you slay, the princess you rescue or the world you save. Again referring to Joseph Campbell's Monomyth, this is accompanied by a personal sacrifice your character has to make. The hero is willing to give his life to save the princess and to complete his task.

Epilogue

The epilogue describes how the character receives the Ultimate Boon, his way home and how the story ends. Sometimes games leave an open ending so that they can be continued some day. Some games, like MMORPGs, do not even have a “real” end. The story itself may end or pause until the next expansion is released, but the game continues.

Author

Thonka

Links

FullCircle

Wikipedia : The Lord of the Rings

Wikipedia : Archetypes

Wikipedia : Game Genres

Wikipedia : Prologue

Wikipedia : Joseph Campbell

Wikipedia : Monomyth

Wikipedia : Lee Sheldon

Wikipedia : Epilogue

Wikipedia : MMORPG

Wikibook : Game Creation with XNA - Types of Games

Books

Character Development And Storytelling For Games by Lee Sheldon (Premier Press 2004)

Die Heldenreise im Film by Joachim Hammann (Zweitausendeins)

Project Management

This section should cover project management and why it is so important, including the basics such as milestones, risk analysis, etc. In particular, tools like MS Project, Zoho, Google Groups or similar should be compared, and their use described.

Authors

to be continued... thonka

- also interested: juliusse

Introduction

After you have finished developing your Xbox game, your aim will be to get as many people as possible to buy and enjoy it, so that you at least recover the money you invested in the game and, at best, earn something on top. Microsoft itself offers a download platform that can be used to distribute games; it contains two sections where independent developers can submit their creations. This chapter gives information about the whole platform and the special sections for independent developers, describes how to publish a game successfully, and provides some information on how Microsoft generally promotes the Xbox to attract more users.

Xbox Games + Marketplace

General

The Xbox Marketplace is a platform where users can purchase games and download videos, game demos, Indie Games (treated in a separate section) and additional content such as map packs or themes for the Xbox 360 Dashboard. It was launched in November 2005 for the Xbox and three years later, in November 2008, for Windows. Since 11th August 2009 it has been possible to download Xbox 360 games. The content is saved on the Xbox 360's hard drive or on an additional memory unit.

Payment

The Xbox Marketplace has its own currency: "Microsoft Points". This way users can purchase content without a credit card, and Microsoft avoids credit card transaction fees.[1] Microsoft Points are offered in packages from 100 up to 5000 points, where 80 points are worth US$1 [2]. They can be purchased by credit card, with Microsoft Point Cards in retail locations and, since May 2011, via PayPal in supported regions. Points of criticism are that users usually have to buy more points than they actually need, and that the points obscure the true cost of the content:

"To buy even a single 99-cent song from the Zune store, you have to purchase blocks of “points” from Microsoft, in increments of at least $5. You can’t just click and have the 99 cents deducted from a credit card, as you can with iTunes. [..] So, even if you are buying only one song, you have to allow Microsoft, one of the world’s richest companies, to hold on to at least $4.01 of your money until you buy another." [3]

"Microsoft is obscuring the true cost of this content. A song on Zune typically costs 79 Microsoft Points, which, yes, is about 99 cents. But it seems to be less because it's just 79 Points." [4]

These statements come from reviews of Zune, a platform similar to iTunes for streaming and downloading music and movies, which is also available on the Xbox 360. Microsoft Points are the currency of Zune too, and points can be transferred between Xbox Live Marketplace and Zune accounts.
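The arithmetic behind these quotes follows directly from the rate of 80 points = US$1 stated above. The helper below is a minimal sketch for checking it; the class and method names are hypothetical, and the rate is the one given in this section, which may have changed since.

```csharp
using System;

// Hypothetical helper for Microsoft Points, assuming the rate of
// 80 points = US$1 stated in the text.
public static class MicrosoftPoints
{
    public const decimal PointsPerDollar = 80m;

    // Dollar value of a number of points (decimal avoids floating-point rounding)
    public static decimal ToDollars(int points) => points / PointsPerDollar;

    // Whole points received for a dollar amount
    public static int FromDollars(decimal dollars) => (int)(dollars * PointsPerDollar);
}
```

For the Zune example: a $5 block is `FromDollars(5m)` = 400 points, a 79-point song costs `ToDollars(79)` = 0.9875 (about 99 cents), and the remaining 321 points are worth `ToDollars(321)` = 4.0125, roughly the $4.01 mentioned in the quote.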

Xbox Live Arcade

General

Xbox Live Arcade was launched on 3rd November 2004 for the original Xbox. It is a section of the Xbox Marketplace that accepts games from a wide variety of sources, from indie developers and medium-sized companies to large established publishers, who develop simple pick-up-and-play games for casual gamers, for example "Solitaire" or "Bejeweled". [5] It started with 27 arcade games; now there are about 400 games available. In November 2005, Xbox Live Arcade was relaunched on the Xbox 360. It is now fully integrated into the Dashboard, and every Arcade title has a leaderboard and 200 Achievement points.

Publishing an Arcade Game

Publishing an Arcade game can cost a few hundred dollars and takes a small team about 4-6 months to develop and test. The developers have to work closely with the Xbox Live Arcade team on everything from game design and testing to ratings, localization and certification. When everything is finished, the Xbox Live Arcade team puts the game onto Xbox Live. The whole process can be broken down into the following steps: [6]

- Contact - Write an email to the Arcade team describing the concept; if they are interested, they will send some forms to fill in.

- Submission - Formally submit the game concept with as much information as possible (design documents, screenshots and prototypes) to be discussed in the Arcade portfolio review.

- Create - After a positive review, development can start. Tools specifically for Arcade game development are available (e.g. for Achievements and Leaderboards). An Arcade team producer is assigned to work with the developer on design, Gamerscore and Achievements, and on a schedule with milestones for showing progress to the Arcade team.

- Full test - A final test with debugging and verification, followed by the regular Xbox 360 certification.

- Publishing - The game is now available in the Xbox Live Arcade section of the Marketplace.

Xbox Live Indie Games

General

Xbox Live Indie Games is a category in the Xbox Marketplace for games that independent developers create with Microsoft XNA. Unlike Xbox Live Arcade games, Indie Games are tested only by the community, have much lower production costs and are often very cheap. About 1900 Indie Games have been submitted since the launch on 19th November 2008.[7]

Publish an Indie Game

Before starting to develop an Indie Game, some restrictions should be noted:[8]

- The binary distribution package must be no larger than 150 MB and should be compiled as a single binary package.

- Games are priced at 200, 400 or 800 Microsoft Points; games larger than 50 MB must be priced at 400 Microsoft Points or more.

- Each game needs an eight-minute trial period so that users can test it. After the trial, they can decide whether or not to buy the game.

- Xbox Live Indie Games do not have the same features as Xbox Live Arcade games. There are no Achievements or leaderboards, but they support multiplayer, game invitations, game information, Xbox Live Avatars and Party Chat.

- AppHub membership is required
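The size and price restrictions above can be checked before uploading. The following is a minimal sketch of such a check; the class name and return convention are hypothetical, and the limits are simply the ones listed in this section, not values read from any official tool or API.

```csharp
using System;

// Hypothetical pre-submission check for the Indie Game restrictions listed above.
// The limits are taken from the text and are assumptions, not an official API.
public static class IndieGameRules
{
    public const int MaxPackageMB = 150;
    public const int LargeGameMB = 50;
    static readonly int[] AllowedPrices = { 200, 400, 800 };

    // Returns null if the package satisfies the rules, otherwise the reason it does not.
    public static string Check(double packageMB, int pricePoints)
    {
        if (packageMB > MaxPackageMB)
            return "Package is larger than " + MaxPackageMB + " MB.";
        if (Array.IndexOf(AllowedPrices, pricePoints) < 0)
            return "Price must be 200, 400 or 800 Microsoft Points.";
        if (packageMB > LargeGameMB && pricePoints < 400)
            return "Games larger than " + LargeGameMB + " MB must cost at least 400 points.";
        return null;
    }
}
```

For example, a 60 MB game priced at 200 points is rejected, while the same game at 400 points passes.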

Publishing is also a multi-step process, but much less complex than for Xbox Live Arcade games:[9][10]

- Create - Develop the game in C# with the XNA Game Studio framework, which allows developers to debug and test their game internally before release.

- Submission - Upload the package to the App Hub website, add some metadata, specify the price and design the Marketplace offer.

- Playtest - Other developers of the App Hub community can test the game for one week and give feedback.

- Peer Review - Developers check the game for unacceptable content, instability or other problems that could block publishing. Multiple reviews are needed to pass the peer review. If a game is declined, it can be resubmitted once the feedback has been addressed.

- Release - If the peer review is successful, the game becomes available in the Indie Games section of the Marketplace. The developer then receives 70% of the revenue and Microsoft 30% (paid in US$).
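Combining the 70/30 split with the rate of 80 points = US$1 from the Payment section gives a rough estimate of what a developer earns. This is a minimal sketch; the names are hypothetical, and taxes, regional pricing and payment thresholds are ignored.

```csharp
using System;

// Hypothetical revenue estimate: 70% developer share, 80 points = US$1 (both from the text).
// Taxes, regional prices and payout thresholds are deliberately ignored.
public static class RevenueSplit
{
    public const decimal DeveloperShare = 0.70m;
    public const decimal PointsPerDollar = 80m;

    // Developer's share in US$ for a number of copies sold at a given price in points
    public static decimal DeveloperRevenue(int pricePoints, int copiesSold)
    {
        decimal gross = pricePoints / PointsPerDollar * copiesSold;
        return gross * DeveloperShare;
    }
}
```

For instance, 1000 copies at 400 points (US$5 each) gross US$5000, of which the developer receives US$3500.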

AppHub

AppHub is a website and community for Xbox Live Indie Games (and Windows Phone) developers. AppHub offers free tools like XNA Game Studio and the DirectX Software Development Kit, and provides community forums where users can ask questions, give advice, or just discuss the finer points of programming. Code samples give developers a jump-start on implementing new features, and the Education Catalog is packed with articles, tutorials, and utilities for beginners and experts alike. An annual App Hub subscription for US$99 provides access to the Xbox LIVE Marketplace, where you can sell or give away your creation to a global audience. For students the membership is free if they register through MSDNAA. AppHub also provides a developer dashboard where developers can manage all aspects of how their game appears in the Marketplace, monitor downloads, and track how much money they have earned. AppHub membership is therefore required to publish an Indie Game. Each year, members can submit up to 10 Indie Games, peer review new Indie Games before they are released, and receive premium offers from partners.

Xbox Marketing Strategies

53 million Xbox consoles have been sold worldwide and the Xbox Live community has more than 30 million members, so it is getting harder for Microsoft to attract new customers. Microsoft therefore tries to win users from new target audiences and develops new strategies to get the Xbox into as many homes as possible. It relies heavily on viral marketing and encourages users to interact as much as possible in the Xbox Live community.

Xbox Party

The typical Xbox gamer is male, so there are many women who could be won as new customers. Inspired by "Tupperware parties", Microsoft offered Xbox packs for throwing a home party to present the Xbox. Hosts received an Xbox party pack of freebies that included microwaveable popcorn, the Xbox trivia game "Scene It? Box Office Smash," an Xbox universal media remote control, a three-month subscription to Xbox Live, and 1600 Microsoft Points. The aim is to spread the Xbox into a new target audience: people want the console their friends already have.[11]

Special offers

Another strategy targets those in the main target audience who do not own an Xbox yet. A main obstacle is the cost of an Xbox, so a special offer gives an Xbox 360 to all U.S. college students who buy a Windows 7 PC. By targeting college students, Microsoft is going after a particularly attractive demographic: college students aged 18 to 24 spend more than 200 billion dollars a year on consumables. The average student has about $600 a month in disposable income from part-time work, work-study or scholarships. Students also typically don't have mortgages or car payments. Because of this, they can spend their money less conservatively than adults who have those expenses on top of paying back college loans and possibly providing for their families. [12]

To promote the marketplace and connect Windows Phone and Xbox users more closely, Microsoft offers a free Xbox 360 game to developers of Windows Phone apps, and the best app also wins a Windows Phone 7. The offer, called the Yalla App-a-thon competition, is only available for the first 100 apps.[13]

Promote Indie Game

Indie Games are usually developed by independent developers on a low budget. The best strategy for advertising an Indie Game is to spread it as widely as possible. Users can rate games in the Marketplace, and games with good ratings are downloaded more often. When someone plays an Indie Game, their friends on Xbox Live can see it, so the game may spread further and further through the community. Websites like IndieGames.com regularly present popular Indie Games; every developer should aim to get as much attention as possible and rely on viral marketing.

Weblinks

- Xbox Marketplace

- App Hub

- Wikipedia: Xbox Live Marketplace

- Wikipedia: Microsoft Points

- Wikipedia: Xbox Live Arcade

- Wikipedia: Xbox Live Indie Games

References

- ↑ http://www.1up.com/news/xbl-wtf-pay-exact-change

- ↑ http://thewrongadvices.com/2007/03/05/xbox-live-points-converter/

- ↑ http://ptech.allthingsd.com/20061109/zune-challenges-ipod/

- ↑ http://www.winsupersite.com/reviews/zune.asp

- ↑ http://money.cnn.com/2004/10/13/commentary/game_over/column_gaming/index.htm

- ↑ http://xboxlive.ign.com/articles/721/721843p2.html

- ↑ http://marketplace.xbox.com/en-US/Games/XboxIndieGames

- ↑ http://create.msdn.com/en-US/home/faq

- ↑ http://www.gamasutra.com/view/feature/3545/sponsored_feature_democratizing_.php

- ↑ http://www.gamasutra.com/view/feature/3840/sponsored_feature_xbox_live_.php

- ↑ http://www.businessinsider.com/2009/2/microsoft-throwing-xbox-360-tupperware-parties-to-hook-women-brilliant-msft

- ↑ http://smallbusiness.uprinting.com/product-marketing-strategy-microsoft-offering-students-free-xbox-360/

- ↑ http://blog.yallaapps.com/2011/05/18/yalla-app-a-thon-%E2%80%93-publish-an-app-and-get-an-xbox-360%C2%AE-game/

Mathematics and Physics

Introduction

Unfortunately, every good game, especially the 3D kind, requires a basic knowledge of vectors and matrices. Collision detection, especially when dealing with thousands of objects, also requires special data structures. Ballistics and inverse kinematics are covered here as well, as is character animation. Last but not least, a couple of physics engines are introduced.

More Details

Lore ipsum ...

Vectors and Matrices

We need to recall some basic facts about vector and matrix algebra, especially when trying to develop 3D games. A nice introduction with XNA examples can be found in the book by Cawood and McGee. [1]

Right Triangle

Once upon a time there was little Hypotenuse. He had two cousins: the Opposite and his sister the Adjacent. Both were usually just known by their nicknames 'Sine'[2] and 'Cosine'[3]. They lived together in a right triangle close to the woods. They were related through his mother's sister, aunty Alpha. His father, who was a mathematician, used to say that:

$$\sin\alpha = \frac{\text{Opposite}}{\text{Hypotenuse}}, \qquad \cos\alpha = \frac{\text{Adjacent}}{\text{Hypotenuse}}$$

Sometimes he also referred to uncle Tangent (who was married to aunty Alpha) and said that

$$\tan\alpha = \frac{\sin\alpha}{\cos\alpha} = \frac{\text{Opposite}}{\text{Adjacent}}$$

so in a sense uncle Tangent of aunty Alpha was Sine divided by Cosine. To us that didn't make any sense, but Hypotenuse's father said that was how it always was.
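In game code these relations are used all the time, for example to turn a heading angle and a distance into x and y offsets. The following is a small sketch; the class and method names are hypothetical, and angles are in radians as expected by `Math.Sin` and `Math.Cos`.

```csharp
using System;

// Sketch of the right-triangle relations:
// sin(alpha) = Opposite / Hypotenuse, cos(alpha) = Adjacent / Hypotenuse,
// tan(alpha) = sin(alpha) / cos(alpha) = Opposite / Adjacent.
public static class RightTriangle
{
    // Splits a hypotenuse of the given length at angle alpha (radians) into its two legs
    public static (double Opposite, double Adjacent) Legs(double hypotenuse, double alpha)
        => (hypotenuse * Math.Sin(alpha), hypotenuse * Math.Cos(alpha));
}
```

For a hypotenuse of 2 and alpha = 30° (π/6), the opposite leg is 1 and the adjacent leg is about 1.732; their ratio equals tan(30°).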

Vectors

Matrices

References

- ↑ S. Cawood and P. McGee (2009). Microsoft XNA Game Studio Creator’s Guide. McGraw-Hill.

- ↑ Wikipedia:Sine

- ↑ Wikipedia:Cosine

Collision Detection

Collision detection is one of the basic components of a 3D game. It is important for a realistic appearance of the game and requires fast and robust algorithms. Without some sort of collision detection you cannot check whether there is a wall in front of your player or whether your player is about to walk into another object.

Bounding Spheres

First we need to answer the question "What is a bounding sphere?" A bounding sphere is a ball that completely encloses an object and whose center lies at, or close to, the center of that object. A bounding sphere is defined by its center point and its radius.

In collision detection, bounding spheres are often used for roughly ball-shaped objects like rocks, asteroids or space ships.

Let's take a look at what happens when two spheres touch. As the image shows, the radius of each sphere is then also the distance from its center to the point where it touches the other sphere's skin, so the distance between the two centers equals radius1 + radius2. If the distance were greater, the two spheres would not touch; if it were less, the spheres would intersect.

A practical way to determine whether a collision has occurred between two objects with bounding spheres is therefore to compute the distance between their centers and check whether it is less than the sum of their bounding sphere radii.
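The test just described fits in a few lines. In this sketch `System.Numerics.Vector3` stands in for XNA's `Vector3` (both provide `DistanceSquared`), and the `Sphere` type is hypothetical; XNA's own `BoundingSphere` provides the same test through its `Intersects` method.

```csharp
using System;
using System.Numerics;

// Minimal sphere-sphere test (hypothetical Sphere type).
public readonly struct Sphere
{
    public readonly Vector3 Center;
    public readonly float Radius;

    public Sphere(Vector3 center, float radius) { Center = center; Radius = radius; }

    // The spheres touch or overlap when the distance between the centers is at most
    // the sum of the radii. Comparing squared values avoids a square root.
    public bool Intersects(Sphere other)
    {
        float radiusSum = Radius + other.Radius;
        return Vector3.DistanceSquared(Center, other.Center) <= radiusSum * radiusSum;
    }
}
```

Squaring both sides keeps the comparison equivalent (distances and radii are non-negative) while skipping the comparatively expensive square root, which matters when many pairs are tested per frame.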

Another way is to use the balance point of the object as the center of the bounding sphere, that is, the average position (midpoint) of all its vertices. This algorithm gives you a more exact midpoint than the first way.
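A sketch of this approach: the center is the average of all vertex positions, and the radius is the distance to the farthest vertex, so every vertex is guaranteed to lie inside. The method name is hypothetical and `System.Numerics.Vector3` stands in for XNA's `Vector3`. Note that this sphere is not necessarily the smallest possible enclosing sphere.

```csharp
using System;
using System.Numerics;

// Builds a bounding sphere around the vertices' balance point (hypothetical helper).
public static class SphereBuilder
{
    public static (Vector3 Center, float Radius) FromVertices(Vector3[] vertices)
    {
        if (vertices == null || vertices.Length == 0)
            throw new ArgumentException("Need at least one vertex.", nameof(vertices));

        // Center = average (midpoint) of all vertex positions
        Vector3 sum = Vector3.Zero;
        foreach (Vector3 v in vertices) sum += v;
        Vector3 center = sum / vertices.Length;

        // Radius = largest distance from the center to any vertex
        float maxDistSq = 0f;
        foreach (Vector3 v in vertices)
            maxDistSq = Math.Max(maxDistSq, Vector3.DistanceSquared(center, v));

        return (center, (float)Math.Sqrt(maxDistSq));
    }
}
```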

XNA Bounding Spheres

Microsoft's XNA provides a structure called "BoundingSphere" that you can use when developing your own game, so there is no need to implement the calculations yourself. Models in XNA are made up of one or more meshes. For collisions you usually want one sphere that encloses the whole model, so at model load time you loop through all the meshes in your model and expand a main model sphere:

// Start from the first mesh's sphere so the merged result does not also include the origin
m_boundingSphere = m_model.Meshes[0].BoundingSphere;
foreach (ModelMesh mesh in m_model.Meshes)
{
    // Grow the model-wide sphere so that it also encloses this mesh's sphere
    m_boundingSphere = BoundingSphere.CreateMerged(m_boundingSphere, mesh.BoundingSphere);
    // ...
}

To check whether two spheres have collided, XNA provides the Intersects method:

bool hasCollided=sphere.Intersects(otherSphere);

Bounding Rectangles or Bounding Box

For collision detection with rectangles, you want to see whether two rectangular areas are in any way touching or overlapping each other. For this we use a bounding box. A bounding box is simply a box that encloses all the geometry of a 3D object. It is easy to calculate one from a set of vertices by looping through all the vertices and finding the smallest and biggest x, y and z values.

To create a bounding box around our model in model space, you need to calculate the midpoint and the four corner points of the rectangle you want to enclose. Then you build a rotation matrix and rotate the four points about the midpoint by the given rotation value. After that, you go through all the vertices in the model, keeping track of the minimum and maximum x, y and z positions. This gives two opposite corners of the box, from which all the other corners can be calculated.
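The min/max scan and the overlap test can be sketched as follows. The `Aabb` type is hypothetical and `System.Numerics.Vector3` stands in for XNA's `Vector3`; in XNA itself, `BoundingBox.CreateFromPoints` and `BoundingBox.Intersects` do the same job.

```csharp
using System;
using System.Numerics;

// Axis-aligned bounding box built by scanning vertices (hypothetical type).
public readonly struct Aabb
{
    public readonly Vector3 Min, Max;

    public Aabb(Vector3 min, Vector3 max) { Min = min; Max = max; }

    // Smallest and biggest x, y and z over all points
    public static Aabb FromPoints(Vector3[] points)
    {
        Vector3 min = new Vector3(float.MaxValue);
        Vector3 max = new Vector3(float.MinValue);
        foreach (Vector3 p in points)
        {
            min = Vector3.Min(min, p);
            max = Vector3.Max(max, p);
        }
        return new Aabb(min, max);
    }

    // Two boxes touch or overlap only if their extents overlap on every axis
    public bool Intersects(Aabb o) =>
        Min.X <= o.Max.X && o.Min.X <= Max.X &&
        Min.Y <= o.Max.Y && o.Min.Y <= Max.Y &&
        Min.Z <= o.Max.Z && o.Min.Z <= Max.Z;
}
```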
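For the rotated rectangle, a 2D sketch of the steps above: rotate the four corners about the midpoint and take the minimum and maximum of the rotated corners. The names are hypothetical; `System.Numerics` types stand in for XNA's `Vector2` and `Matrix`.

```csharp
using System;
using System.Numerics;

// Axis-aligned bounds of a rectangle rotated about its midpoint (hypothetical helper).
public static class RotatedRect
{
    public static (Vector2 Min, Vector2 Max) Bounds(Vector2 center, Vector2 halfSize, float rotation)
    {
        // The four corners relative to the midpoint
        Vector2[] corners =
        {
            new Vector2(-halfSize.X, -halfSize.Y), new Vector2(halfSize.X, -halfSize.Y),
            new Vector2(halfSize.X, halfSize.Y),   new Vector2(-halfSize.X, halfSize.Y),
        };

        // Rotating about the origin and then adding the center = rotating about the midpoint
        Matrix3x2 rotate = Matrix3x2.CreateRotation(rotation);
        Vector2 min = new Vector2(float.MaxValue), max = new Vector2(float.MinValue);
        foreach (Vector2 corner in corners)
        {
            Vector2 p = Vector2.Transform(corner, rotate) + center;
            min = Vector2.Min(min, p);
            max = Vector2.Max(max, p);
        }
        return (min, max);
    }
}
```

Rotating a 4×2 rectangle centered at (10, 10) by 90° gives bounds from (9, 8) to (11, 12): width and height simply swap.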

XNA Bounding Box

Because each model is made up of several meshes, we need to find the minimum and maximum vertex positions of every mesh. Each "ModelMesh" object in XNA is split into parts (ModelMeshPart), and each part gives access to the vertex buffer (VertexBuffer) holding its vertex data, from which we can get a copy of the vertices using the GetData call.

// Requires: using System; using Microsoft.Xna.Framework; using Microsoft.Xna.Framework.Graphics;
public BoundingBox CalculateBoundingBox()
{
    // Running min and max of all vertex positions; start "inverted" so the first vertex replaces them
    Vector3 modelMin = new Vector3(float.MaxValue, float.MaxValue, float.MaxValue);
    Vector3 modelMax = new Vector3(float.MinValue, float.MinValue, float.MinValue);
    // m_transforms holds the absolute bone transforms, e.g. filled at load time with
    // m_model.CopyAbsoluteBoneTransformsTo(m_transforms)
    foreach (ModelMesh mesh in m_model.Meshes)
    {
        Matrix boneTransform = m_transforms[mesh.ParentBone.Index];
        // There may be multiple parts in a mesh (different materials etc.), so loop through each
        foreach (ModelMeshPart part in mesh.MeshParts)
        {
            // The stride is how big, in bytes, one vertex is in the vertex buffer
            int stride = part.VertexBuffer.VertexDeclaration.VertexStride;
            byte[] vertexData = new byte[stride * part.NumVertices];
            // Copy this part's vertices as raw bytes; with T = byte, elementCount is a byte count
            part.VertexBuffer.GetData(part.VertexOffset * stride, vertexData, 0, part.NumVertices * stride, 1);
            // We assume the position is always the first 3 float values of each vertex
            for (int ndx = 0; ndx < vertexData.Length; ndx += stride)
            {
                Vector3 vertPosition = new Vector3(
                    BitConverter.ToSingle(vertexData, ndx),
                    BitConverter.ToSingle(vertexData, ndx + sizeof(float)),
                    BitConverter.ToSingle(vertexData, ndx + sizeof(float) * 2));
                // Transform each vertex by its mesh's bone before comparing, so that rotated
                // meshes still produce a correct, non-inverted box
                vertPosition = Vector3.Transform(vertPosition, boneTransform);
                // Expand the model extents by this vertex
                modelMin = Vector3.Min(modelMin, vertPosition);
                modelMax = Vector3.Max(modelMax, vertPosition);
            }
        }
    }
    // Create and return the model bounding box
    return new BoundingBox(modelMin, modelMax);
}

Terrain Collision

Collision detection between a terrain and an object is different from collision detection between objects.

First of all, you have to determine the coordinates of your current player (object). The height map of your terrain gives you a "gap value", the distance between two neighboring vertices. Dividing your position by this gap value tells you which vertices lie at your position, so you can get the 4 vertices of the square you are in from your height map buffer. Using these data and your position inside this square, you can calculate the height of the terrain at your position and keep the right distance to it, so that there is no collision with it.
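A sketch of the lookup just described, assuming the heights are stored in a `float[x, z]` array with a uniform gap and that the position lies inside the map. It uses bilinear interpolation of the four corner heights; if your terrain is rendered as two triangles per square, interpolating on the triangle you stand on would match the rendered surface more exactly. All names are hypothetical.

```csharp
using System;

// Terrain height under a position via bilinear interpolation (hypothetical helper).
// Assumes heights[x, z] with a uniform spacing "gap" between neighboring vertices.
public static class TerrainHeight
{
    public static float HeightAt(float[,] heights, float gap, float x, float z)
    {
        // Lower corner of the square we are in, clamped so ix + 1 and iz + 1 stay inside the map
        int ix = Math.Max(0, Math.Min((int)Math.Floor(x / gap), heights.GetLength(0) - 2));
        int iz = Math.Max(0, Math.Min((int)Math.Floor(z / gap), heights.GetLength(1) - 2));

        // Position inside the square, from 0 to 1 along each axis
        float fx = x / gap - ix;
        float fz = z / gap - iz;

        // Interpolate along x on both edges of the square, then along z between them
        float h00 = heights[ix, iz], h10 = heights[ix + 1, iz];
        float h01 = heights[ix, iz + 1], h11 = heights[ix + 1, iz + 1];
        float nearEdge = h00 + (h10 - h00) * fx;
        float farEdge = h01 + (h11 - h01) * fx;
        return nearEdge + (farEdge - nearEdge) * fz;
    }
}
```

A player can then be kept on the ground with something like `playerY = HeightAt(map, gap, playerX, playerZ) + eyeHeight`, where `eyeHeight` is whatever distance your game keeps above the terrain.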

Collision Performance

Collision detection can slow down a game; it is often one of the most time-consuming components of an application. Data structures such as quadtrees and octrees help here.

Quadtree (2D)

A quadtree is a tree structure that uses a principle called ‘spatial locality’ to speed up finding all possible collisions: objects can only hit things close to them. To improve performance, you should avoid testing against objects that are far away.

The easiest way to check for collisions is to divide the area to be checked into a uniform grid and register each object with all grid cells it intersects. The weakness of such a grid is that one cell size has to suit all objects: large objects overlap many cells, while coarse cells put many small objects into the same cell. The quadtree tries to overcome this weakness by recursively splitting the collision space into smaller subregions. Every region is divided into exactly 4 smaller regions of the same size, so you end up with multiple grids of different resolutions, where the number of cells grows by a factor of four (two per axis) every time the resolution is increased. Every object resides in the cell (called quad node or quadrant) with the highest resolution that still contains it completely. A search starts at the object's node and climbs up to the root node.
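The following sketch shows the two operations described above: inserting an object into the smallest quadrant that fully contains it, and searching from the object's node up to the root. The `Box` and `QuadNode` types, the fixed `maxDepth` and the eager splitting are simplifying assumptions for illustration, not a specific library's design.

```csharp
using System;
using System.Collections.Generic;

// Axis-aligned rectangle used for object bounds and node bounds (hypothetical type).
public sealed class Box
{
    public readonly string Name;
    public readonly float MinX, MinY, MaxX, MaxY;

    public Box(string name, float minX, float minY, float maxX, float maxY)
    { Name = name; MinX = minX; MinY = minY; MaxX = maxX; MaxY = maxY; }

    public bool Contains(Box b) =>
        b.MinX >= MinX && b.MaxX <= MaxX && b.MinY >= MinY && b.MaxY <= MaxY;

    public bool Intersects(Box b) =>
        MinX <= b.MaxX && b.MinX <= MaxX && MinY <= b.MaxY && b.MinY <= MaxY;
}

public sealed class QuadNode
{
    public readonly Box Bounds;
    public readonly QuadNode Parent;
    public readonly List<Box> Objects = new List<Box>();
    private readonly int depth;
    private QuadNode[] children; // created on the first split

    public QuadNode(Box bounds, QuadNode parent, int depth)
    { Bounds = bounds; Parent = parent; this.depth = depth; }

    // Stores obj in the smallest quadrant that still fully contains it
    // (never deeper than maxDepth) and returns that node.
    public QuadNode Insert(Box obj, int maxDepth)
    {
        if (depth < maxDepth)
        {
            if (children == null) Split();
            foreach (QuadNode child in children)
                if (child.Bounds.Contains(obj))
                    return child.Insert(obj, maxDepth);
        }
        Objects.Add(obj);
        return this;
    }

    // Divides this region into four equally sized quadrants.
    private void Split()
    {
        float midX = (Bounds.MinX + Bounds.MaxX) / 2, midY = (Bounds.MinY + Bounds.MaxY) / 2;
        children = new[]
        {
            new QuadNode(new Box(null, Bounds.MinX, Bounds.MinY, midX, midY), this, depth + 1),
            new QuadNode(new Box(null, midX, Bounds.MinY, Bounds.MaxX, midY), this, depth + 1),
            new QuadNode(new Box(null, Bounds.MinX, midY, midX, Bounds.MaxY), this, depth + 1),
            new QuadNode(new Box(null, midX, midY, Bounds.MaxX, Bounds.MaxY), this, depth + 1),
        };
    }

    // Climbs from this node (where obj is stored) to the root and returns the objects that
    // actually overlap obj. Doing this for every object finds each overlapping pair at least
    // once, from the more deeply stored object of the pair.
    public List<Box> FindCollisions(Box obj)
    {
        var hits = new List<Box>();
        for (QuadNode node = this; node != null; node = node.Parent)
            foreach (Box other in node.Objects)
                if (!ReferenceEquals(other, obj) && other.Intersects(obj))
                    hits.Add(other);
        return hits;
    }
}
```

To find all collisions, insert every object, remember the node `Insert` returned for it, and call `FindCollisions` on that node. Two objects stored in the same node are reported twice, once from each side.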

Octree (3D)

Octrees work the same way as quadtrees, but each region is split into eight equally sized sub-cubes instead of four squares. They are used for collision detection in 3D space.
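The main change from the quadtree is how a child is chosen: each axis contributes one bit, giving eight children instead of four. The sketch below shows this child selection; the bit layout is an arbitrary choice for this example, and `System.Numerics.Vector3` stands in for XNA's `Vector3`.

```csharp
using System.Numerics;

// Octree child selection (hypothetical helper): one bit per axis, index 0..7.
public static class Octree
{
    // Which of the eight children of a node (given its center) contains the point
    public static int ChildIndex(Vector3 nodeCenter, Vector3 point)
    {
        int index = 0;
        if (point.X >= nodeCenter.X) index |= 1;
        if (point.Y >= nodeCenter.Y) index |= 2;
        if (point.Z >= nodeCenter.Z) index |= 4;
        return index;
    }

    // Center of child 'index' for a node with the given center and half-size
    public static Vector3 ChildCenter(Vector3 nodeCenter, float halfSize, int index)
    {
        float q = halfSize / 2;
        return nodeCenter + new Vector3(
            (index & 1) != 0 ? q : -q,
            (index & 2) != 0 ? q : -q,
            (index & 4) != 0 ? q : -q);
    }
}
```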

References

Bounding Volumes and Collisions

Bounding Sphere Collision Detection

Author

sarah

Ballistics

If one thinks about ballistics, the first things that come to mind are guns and various deadly bullets. But especially in games, ballistics can be concerned with the movement of any kind of projectile, from balls to bananas and from coconuts to rockets.

Ballistics helps determine how these projectiles behave during movement and what their effects are[1]. This chapter shows and explains what a game programmer needs to know when programming anything related to projectiles.

Basic Physics

The movement of any projectile is heavily influenced by its surroundings and by the physical laws it should abide by. However, it is important to remember that games do not need to be set on earth, and the experience on an alien planet may be completely different from what we know to be valid. Therefore the formulas and explanations listed here may need to be adjusted to whatever world you intend to let projectiles move around in.

Mass and Weight

It is a common misunderstanding that mass is the same thing as weight. While the weight of an object can change depending on the environment it is placed in, its mass stays the same[2]. Weight (denoted by W) is defined as the force that exists when gravity acts on a mass[3]:

- $W = m \cdot g$, where g is the gravitational acceleration present and m denotes the mass of the object

Velocity and Acceleration

Velocity describes the distance an object covers over a certain amount of time together with the direction of that movement. It is the speed and direction at which your car travels along the highway or at which a bullet whizzes through the air. Probably the most commonly seen units for speed are km/h and m/s: km and m (kilometer and meter) denote the distance traveled, and h and s (hour and second) the time interval. Velocity is a vector: its direction is the direction of movement and its absolute value (magnitude) is the speed.

Imagine a ball that is thrown straight up: it will not have the same speed throughout its flight. It slows down until it reaches its apex and then speeds up again on its way down. This change is called acceleration, the rate at which the velocity of an object changes over time. Newton's second law of motion shows that acceleration depends on the force exerted on an object (e.g. the force from the arm and hand that throw the ball) and on the mass of that object (e.g. the ball):

$\vec{a} = \frac{\vec{F}}{m}$

The acceleration of the object points in the same direction as the applied force. The unit of acceleration is distance traveled over time squared, for example m/s².
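To see how a force turns into movement, the following sketch computes the acceleration with Newton's second law and applies it for one time step. The integration scheme (semi-implicit Euler: update the velocity first, then the position) is an assumption of this sketch rather than something the text prescribes, and System.Numerics.Vector3 stands in for XNA's Vector3. The class name Motion is hypothetical.

```csharp
using System.Numerics;

public static class Motion
{
    // Newton's second law: a = F / m (force in N, mass in kg, result in m/s²).
    public static Vector3 Acceleration(Vector3 force, float mass) => force / mass;

    // One semi-implicit Euler step of dt seconds.
    public static (Vector3 position, Vector3 velocity) Step(
        Vector3 position, Vector3 velocity, Vector3 force, float mass, float dt)
    {
        Vector3 a = Acceleration(force, mass);
        velocity += a * dt;        // the acceleration changes the velocity...
        position += velocity * dt; // ...and the new velocity changes the position
        return (position, velocity);
    }
}
```

For a 0.5 kg ball thrown straight up at 10 m/s, the only force after release is its weight, (0, -4.9, 0) N; one step of 0.1 s lowers the vertical speed to 9.02 m/s.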

Gravity

Universal gravitation is a force that acts between any two objects, drawing them towards each other. This force depends on the objects' masses as well as on their distance from each other.[4] The general formula to calculate this force is:

$F = G \frac{m_1 m_2}{r^2}$, where $m_1$ and $m_2$ are the objects' masses, r is the distance between them and G is the universal gravitational constant

The universal gravitational constant is:[5]

$G \approx 6.674 \times 10^{-11}\ \mathrm{N\,m^2/kg^2}$

When people talk about the gravity of earth, they usually mean the acceleration that a mass experiences because of this attractive force. So gravity is nothing other than acceleration towards the earth's center. This is why an object dropped from a high building stays in free fall until it is stopped by another object, for example the ground. The gravity of earth is defined as follows:

$g = G \frac{m}{r^2}$, where g is the gravity of earth, m the earth's mass and r its radius

The earth's gravity on the surface equals approximately 9.8 meters/second².
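Both formulas translate directly into code, which is handy when your game is set on another world and you need a different value for g. The sketch below (class and method names are hypothetical) uses double precision because the masses involved are huge. The example values are the earth's mass of about 5.972 × 10^24 kg and mean radius of about 6.371 × 10^6 m, and the moon's mass of about 7.342 × 10^22 kg and mean radius of about 1.7374 × 10^6 m.

```csharp
public static class Gravitation
{
    // Universal gravitational constant in N·m²/kg².
    public const double G = 6.674e-11;

    // Attractive force in newtons between masses m1 and m2 (kg) at distance r (m).
    public static double Force(double m1, double m2, double r) => G * m1 * m2 / (r * r);

    // Gravitational acceleration (m/s²) on the surface of a spherical body.
    public static double SurfaceGravity(double mass, double radius) => G * mass / (radius * radius);
}
```

SurfaceGravity(5.972e24, 6.371e6) gives about 9.82 m/s², matching the value above, while the moon's values give about 1.62 m/s².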

Drag

Drag influences the velocity of objects moving through liquids and gases. This force points opposite to the direction of the object's movement and hence reduces the object's speed over time. It depends on the object's speed, shape and cross-sectional area as well as on the density of the fluid; the object's mass determines how strongly the drag force slows it down. Because the flight path computation is usually simplified, you might not end up needing the drag force. You should, however, consider the liquid or gas your projectile moves in and fiddle around with the scaling factors to get an appropriate flight path.
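If you do want an explicit drag force, a common model (not given in the text, so treat it as a swapped-in choice) is the quadratic drag equation $F_D = \frac{1}{2} \rho v^2 C_D A$, where ρ is the fluid density, v the speed, $C_D$ a shape-dependent drag coefficient and A the cross-sectional area. Typical values are about 1.2 kg/m³ for air at sea level and about 0.47 for a sphere. A sketch with System.Numerics.Vector3 and hypothetical names:

```csharp
using System.Numerics;

public static class Drag
{
    // Quadratic drag force (N), pointing against the direction of movement.
    public static Vector3 Force(Vector3 velocity, float fluidDensity, float dragCoefficient, float area)
    {
        float speed = velocity.Length();
        if (speed == 0f) return Vector3.Zero; // no movement, no drag
        float magnitude = 0.5f * fluidDensity * speed * speed * dragCoefficient * area;
        return -magnitude * (velocity / speed); // opposite to the unit direction of motion
    }
}
```

Dividing the result by the projectile's mass gives the deceleration, which is why a heavy ball is slowed down less than a light one of the same shape and size.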

Projectile Movement

In games, the world a player acts in is never a hundred percent accurate representation of the real world. Therefore, when programming the movement of projectiles, it is easier to simplify some of the physics while still creating the illusion that the projectile behaves at least roughly the way a human player would expect. Whether a ball is thrown or a torpedo is shot under water, there are two general, simplified patterns for how projectiles move in games. These movements can be adapted and refined to match the expected movement of a specific projectile.

Projectile Class

It is advisable to write your own projectile class that holds all projectile-specific variables, such as the velocity, as well as the functions to manipulate and calculate the flight path. A basic framework for the class could look something like this:

using Microsoft.Xna.Framework; // Vector3, Matrix, GameTime

public class Projectile
{
    private Vector3 velocity;      // stores the direction and speed of the projectile
    public Vector3 pos;            // current projectile position
    private Vector3 prevPos;       // projectile position at the previous update
    private float totalTimePassed; // (scaled) time passed since the launch
    public bool bmoving = false;   // true while the projectile is moving

    // Constants
    private const float GRAVITY = 9.8f;

    public void Start(Vector3 direction, float speed, Vector3 startPos)
    {
        this.velocity = speed * Vector3.Normalize(direction);
        this.pos = startPos;       // in the beginning the current position is the start position
        this.prevPos = startPos;   // no movement yet
        totalTimePassed = 0f;      // reset, in case the object is reused for another shot
        bmoving = true;
    }

    public void UpdateLinear(GameTime time)
    {
        if (bmoving) LinearFlight(time);
    }

    public void UpdateArching(GameTime time)
    {
        if (bmoving) ArchingFlight(time);
    }
}

To start with, something needs to trigger the movement of the projectile, for example the player's mouse click. On that event you create a new instance of your projectile class and call Start() to launch the projectile. You need to keep a reference to this object because the projectile's position is updated and the projectile redrawn every frame. The update is done by calling either UpdateLinear or UpdateArching, depending on the desired flight path. The new position has to be part of the transformation matrix that is used to draw the projectile in your game world.

In the Start method the direction vector is normalized, so that multiplying it by the speed yields a velocity vector with the same direction as the initial vector and an absolute value equal to the desired speed. Remember that the direction vector passed to Start is the aim vector of whatever launched the projectile. If the aim is changeable, its absolute value can be almost anything. Without normalization, projectiles of the same kind would therefore not be guaranteed to move at the same speed, and the player could not choose the force exerted on the projectile before its release and thereby change its speed.
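The effect of the normalization can be checked in isolation. This sketch repeats the velocity computation from Start with System.Numerics.Vector3 instead of XNA's Vector3 (the class name Launch is hypothetical): aim vectors of different lengths give the same speed, and only the speed argument changes the magnitude.

```csharp
using System.Numerics;

public static class Launch
{
    // The aim vector only supplies the direction; speed supplies the magnitude.
    // Note: a zero-length aim vector cannot be normalized and yields NaN components.
    public static Vector3 InitialVelocity(Vector3 aim, float speed) =>
        speed * Vector3.Normalize(aim);
}
```

For example, an aim of (3, 4, 0) with speed 2 gives the velocity (1.2, 1.6, 0), whose length is 2.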

If your projectile has an obvious front, back and sides, it becomes necessary to change the projectile's orientation according to its flight path. A rotation matrix is orthogonal: its vectors have to be unit vectors that are perpendicular to each other[6]. For a linear flight path we could simply take the normalized velocity vector as the forward vector of the orientation matrix and construct the matrix's right and up vectors accordingly. However, because the projectile's flight direction constantly changes on an arching flight path, it is easier to recalculate the forward vector at each update by subtracting the position held one update earlier from the projectile's current position. To do so, put the following function in your projectile class. Remember to call it before drawing the projectile and put the resulting matrix into the appropriate transformation matrix following the I.S.R.O.T. sequence. This sequence specifies the order in which to multiply the transformation matrices, namely the Identity matrix, Scaling, Rotation, Orientation and Translation.

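public Matrix ConstructOrientationMatrix()
{
    // direction of travel since the last update
    Vector3 forward = pos - prevPos;
    if (forward.LengthSquared() < 1e-12f)
        return Matrix.Identity;       // not moving yet: keep the default orientation

    // get orthogonal vectors dependent on the projectile's flight direction
    // (right-handed as in XNA: right = forward x up, up = right x forward)
    Vector3 right = Vector3.Cross(forward, Vector3.Up);
    if (right.LengthSquared() < 1e-12f)
        right = Vector3.Right;        // flying straight up or down: any perpendicular vector works
    Vector3 up = Vector3.Cross(right, forward);

    // normalize vectors, put them into a 4x4 matrix for further transforms
    Matrix orientation = Matrix.Identity; // M44 = 1, no translation
    orientation.Right = Vector3.Normalize(right);
    orientation.Up = Vector3.Normalize(up);
    orientation.Forward = Vector3.Normalize(forward);
    return orientation;
}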
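The basis construction can be tested outside of XNA. This sketch uses System.Numerics.Vector3 (with Vector3.UnitY as the world up vector) and the hypothetical name TryGetBasis. It computes right = forward × up and up = right × forward, which yields a right-handed basis as XNA expects, and it reports failure in the two cases where the cross product degenerates: no movement since the last update, or a flight straight up or down.

```csharp
using System.Numerics;

public static class Orientation
{
    // Builds right/up/forward unit vectors from the movement since the last update.
    // Returns false if there is no usable direction.
    public static bool TryGetBasis(Vector3 pos, Vector3 prevPos,
        out Vector3 right, out Vector3 up, out Vector3 forward)
    {
        right = up = forward = Vector3.Zero;
        Vector3 dir = pos - prevPos;
        if (dir.LengthSquared() < 1e-12f) return false;     // projectile has not moved
        forward = Vector3.Normalize(dir);
        Vector3 r = Vector3.Cross(forward, Vector3.UnitY);
        if (r.LengthSquared() < 1e-12f) return false;       // forward parallel to world up
        right = Vector3.Normalize(r);
        up = Vector3.Cross(right, forward);                 // unit length: right and forward are orthonormal
        return true;
    }
}
```

Moving along XNA's default forward direction (0, 0, -1) yields right = (1, 0, 0) and up = (0, 1, 0), i.e. an unrotated model.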

Linear Flight

A linear flight is movement along a straight line. This kind of movement might be observed when a ball is thrown straight and very fast. Obviously, even such a ball will eventually fall to the ground if it is not stopped before. However, if it is caught soon after leaving the thrower's hand, its flight path will look linear. To simplify this movement, acceleration and gravity are neglected and the velocity stays the same at all times. The direction of movement is given by the velocity vector and is the same as the aim direction of the gun, hand etc.

If you have active projectiles in your game, the XNA Update function needs to call a function that updates the position of every active projectile object. The projectile's new position is calculated as follows:

$\vec{p}_{new} = \vec{p} + \vec{v} \cdot timePassed$ [7], where $\vec{p}$ is the current position, $\vec{v}$ the velocity and timePassed the time that has passed since the last update.

All this function needs as a parameter is the game time that has passed since the last update. Cawood and McGee suggest scaling this time by dividing it by 90, because otherwise the positions calculated for consecutive frames will be too far apart.

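private void LinearFlight(GameTime timePassed)
{
    prevPos = pos;
    // TotalMilliseconds keeps the fractional part; Milliseconds is only the integer component
    float scaledTime = (float)timePassed.ElapsedGameTime.TotalMilliseconds / 90.0f;
    pos = pos + velocity * scaledTime;
}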
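A standalone sketch of the same update, using System.Numerics.Vector3 instead of XNA's Vector3 and passing the elapsed milliseconds directly instead of a GameTime (both assumptions for testability; LinearFlightSketch is a hypothetical name). Because the step is proportional to the elapsed time, two short frames move the projectile exactly as far as one long frame, so the flight speed does not depend on the frame rate.

```csharp
using System.Numerics;

public static class LinearFlightSketch
{
    // Scale from the text: elapsed milliseconds are divided by 90 (Cawood and McGee).
    public const float TimeScale = 90f;

    // New position after elapsedMilliseconds at constant velocity.
    public static Vector3 Step(Vector3 pos, Vector3 velocity, double elapsedMilliseconds) =>
        pos + velocity * (float)(elapsedMilliseconds / TimeScale);
}
```

With a velocity of (1, 0, 0), ten frames of 9 ms each move the projectile one unit along the x-axis.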

Arching Flight

The arching flight path is a bit more realistic for most flying objects than the linear flight, because it takes gravity into account. Remember that gravity is an acceleration. The position of a projectile under constant acceleration at a certain point in time is:

$\vec{p}(t) = \vec{p}_0 + \vec{v}_0 t + \frac{1}{2} \vec{a} t^2$, where $\vec{p}_0$ is the start position, $\vec{v}_0$ the initial velocity, $\vec{a}$ the acceleration and t the time that has passed

Because gravity pulls the projectile towards the earth, only the y-coordinate of your projectile is affected. The projectile's rate of ascent decreases over time until it stops climbing and starts to fall. The x and z coordinates, however, remain unaffected by this and are calculated just the way they are for the linear flight path.

The following formula shows how to compute the y-position:

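$y = y_0 + v_y \cdot totalTimePassed - \frac{1}{2} g \cdot totalTimePassed^2$, where $y_0$ is the start height, $v_y$ the y-component of the velocity, g the gravity and totalTimePassed the time passed since the projectile was launched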

The minuend is equal to the linear flight formula and the subtrahend is the downward displacement caused by gravity. It becomes obvious that the lower the projectile's speed and the further the velocity's direction points towards the ground, the sooner gravity wins. This function updates the projectile's flight path:

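private void ArchingFlight(GameTime timePassed)
{
    prevPos = pos;
    float elapsedMs = (float)timePassed.ElapsedGameTime.TotalMilliseconds;

    // accumulate overall (scaled) time, remembering the value of the previous update
    float previousTime = totalTimePassed;
    totalTimePassed += elapsedMs / 4096.0f;

    // all coordinates move linearly, just like in the linear flight path
    pos = pos + velocity * (elapsedMs / 90.0f);

    // the y-coordinate is additionally affected by gravity: subtract only this update's
    // share of 0.5 * g * t², so that the total drop equals 0.5 * GRAVITY * totalTimePassed²
    // as in the formula above (the scaling factors may need retuning for your game)
    pos.Y -= 0.5f * GRAVITY * (totalTimePassed * totalTimePassed - previousTime * previousTime);
}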

I scaled the time that is added to the overall time down again, so that gravity does not take effect immediately. For a speed of 1, scaling by 4096 produced a nice flight path, but the best factors depend on your speeds, units and frame rate, so you might want to play around with them. Because 4096 is a power of two, dividing by it gives exactly the same result as multiplying by 1/4096f, which is typically cheaper; whether the compiler makes this substitution for you is not guaranteed. If your game is not set on earth, you should also consider whether the GRAVITY constant needs a different value.
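Instead of updating the position incrementally, the arching formula can also be evaluated directly from the launch values, which makes it easy to answer questions such as "when does the projectile reach its apex?" The sketch below works in unscaled seconds and m/s², uses System.Numerics.Vector3 in place of XNA's Vector3, and its names are hypothetical. The apex is reached when the vertical velocity $v_y - g t$ becomes zero, i.e. at $t = v_y / g$; a projectile launched from height 0 returns to height 0 after twice that time.

```csharp
using System.Numerics;

public static class ArchingFlightSketch
{
    // Position after t seconds for launch position p0, launch velocity v0 and gravity g:
    // x and z move linearly, y additionally loses 0.5 * g * t².
    public static Vector3 PositionAt(Vector3 p0, Vector3 v0, float g, float t) =>
        new Vector3(p0.X + v0.X * t,
                    p0.Y + v0.Y * t - 0.5f * g * t * t,
                    p0.Z + v0.Z * t);

    // Time at which the vertical velocity becomes zero (the apex).
    public static float ApexTime(Vector3 v0, float g) => v0.Y / g;

    // Time at which a projectile launched from height 0 is back at height 0.
    public static float FlightTime(Vector3 v0, float g) => 2f * v0.Y / g;
}
```

For a launch velocity of (3, 9.8, 0) m/s on earth (g = 9.8 m/s²), the apex is reached after 1 s at a height of 4.9 m, and the projectile lands after 2 s, 6 m away from its start.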

Impact