Hadoop in adtech

- 1. Hadoop in the adtech world. Yuta Imai, Solutions Engineer, Hortonworks. © Hortonworks Inc. 2011 – 2015. All Rights Reserved

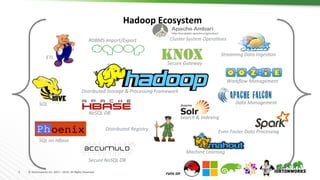

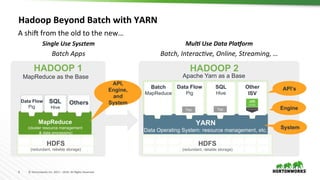

- 6. Hadoop Beyond Batch with YARN: a shift from the old to the new.
  - Hadoop 1, a single-use system for batch apps: MapReduce is the base and handles both cluster resource management and data processing. Pig (data flow), Hive (SQL), and other APIs, engines, and systems run on top of it, over HDFS (redundant, reliable storage) on nodes 1 to N.
  - Hadoop 2, a multi-use data platform for batch, interactive, online, streaming, and other workloads: Apache YARN is the base system (the "data operating system": resource management, etc.). MapReduce (batch) and Tez run as engines on YARN, with Pig (data flow), Hive (SQL), and other ISV engines on top, over the same HDFS storage.

- 7. Architecture Enabled by YARN: a single set of data across the entire cluster, with multiple access methods using "zones" for processing.
  - SQL (Hive): interactive SQL queries for analytics.
  - Pig: script-based ETL algorithms executed in batch to rework data used by Hive and HBase consumers.
  - Stream processing (Storm): identify and act on real-time events.
  - NoSQL (HBase, Accumulo): low-latency access serving a web front end.
  - Benefits, all enabled by YARN: maximize compute resources to lower TCO; no standalone, siloed clusters; simple management and operations.

- 8. Hadoop Workload Evolution: a shift from the old to the new.
  - Hadoop 1, a single-use system for batch apps: MapReduce on HDFS.
  - Hadoop 2, a multi-use data platform (batch, interactive, online, streaming, …): YARN on HDFS (redundant, reliable storage), with data access through MR2, other ISV engines, and multiple further engines (script, SQL, NoSQL, …).
  - Hadoop.Next, a multi-use platform for data and beyond: the same YARN, HDFS, and data access layers, plus apps running in Docker containers (MySQL, Tomcat, other).

- 10. How Do You Operate a Hadoop Cluster? Apache™ Ambari is a platform to provision, manage, and monitor Hadoop clusters.

- 11. Ambari Core Features and Extensibility: Ambari provides core services for operations and development, and extension points for both. Roles shown: Cluster Admin, Developer, Data Architect.
  - Install & Configure: core features are the Install Wizard and Web; extensibility features are Stacks, Blueprints, and REST APIs.
  - Operate, Manage & Administer: core features are Web, Operator Views, Metrics, and Alerts; extensibility features are the Views Framework and REST APIs.
  - Develop: core features are User Views; extensibility comes from the Views Framework.
  - Optimize & Tune: core features are User Views; extensibility comes from the Views Framework.
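
As a rough illustration of the REST APIs mentioned on this slide, the sketch below builds (but does not send) a request for Ambari's cluster list at `/api/v1/clusters`. The host name, port, and credentials are placeholders assumed for the example, not values from the talk.

```python
import base64
import urllib.request


def ambari_clusters_request(base_url, user, password):
    """Build (but do not send) a GET request for Ambari's cluster list."""
    # Ambari's REST API accepts HTTP Basic authentication.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1/clusters",
        headers={"Authorization": f"Basic {token}"},
        method="GET",
    )


# Hypothetical Ambari server and credentials, for illustration only.
request = ambari_clusters_request("http://ambari.example.com:8080", "admin", "admin")
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would perform the call against a real server; it is left out here so the sketch runs without a cluster.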

- 13. New User Views for DevOps.
  - Capacity Scheduler View: browse and manage YARN queues.
  - Tez View: view information related to Tez jobs that are executing on the cluster.

- 15. Apache Zeppelin.
  - Web-based notebook for data engineers, data analysts, and data scientists.
  - Brings interactive data ingestion, data exploration, visualization, sharing, and collaboration features to Hadoop and Spark.
  - Modern data science studio: Scala with Spark, Python with Spark, SparkSQL, Apache Hive, and more.

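- 18. Many Hadoop use cases are Hive.
  - Hive was often used, for example, to build access reports for web services, and the following architecture was very common: web servers ship their logs to Hadoop, the aggregated results are loaded into MySQL, and a report UI reads from MySQL.
  - Queries often took a fair amount of time, so they were usually run as scheduled jobs.
  - Hadoop and MapReduce were the tools for running heavy processing over large volumes of data.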
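
The access-report pipeline on this slide can be pictured with a small in-memory sketch in the map/reduce style: a map step emits one count per log line and a reduce step sums the counts per page. The log format, server names, and sample lines are invented for illustration; a real deployment would run this as a scheduled Hive or MapReduce job over logs in HDFS.

```python
from collections import Counter
from itertools import chain

# Hypothetical access-log lines in the form "<timestamp> <path> <status>".
SAMPLE_LOGS = {
    "web1": ["2016-06-01T00:00:01 /index.html 200", "2016-06-01T00:00:02 /ads/1 200"],
    "web2": ["2016-06-01T00:00:03 /index.html 200"],
    "web3": ["2016-06-01T00:00:04 /ads/1 404", "2016-06-01T00:00:05 /index.html 200"],
}


def map_line(line):
    """Map step: emit (path, 1) for one log line."""
    _timestamp, path, _status = line.split()
    return path, 1


def reduce_counts(pairs):
    """Reduce step: sum the emitted counts per path."""
    totals = Counter()
    for path, count in pairs:
        totals[path] += count
    return dict(totals)


def access_report(logs_by_server):
    """Combine the logs of every web server and count hits per path."""
    all_lines = chain.from_iterable(logs_by_server.values())
    return reduce_counts(map_line(line) for line in all_lines)


report = access_report(SAMPLE_LOGS)
print(report)
```

In the architecture above, the resulting per-page counts are what would be loaded into MySQL for the report UI.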

- 19. Attempts to speed up SQL on big data: Hive on MapReduce was not fast enough for interactive queries.
  - Presto
  - Impala
  - Drill
  - Shark (now SparkSQL)

- 21. SQL on big data: the arrival of cloud services.
  - Amazon Redshift
  - Google BigQuery

- 22. Of course, Hive itself has also been getting faster.
  - Stinger Initiative: speeding Hive up by 100x or more. Up to Hive 1.2.1 this brought Tez, the Cost Based Optimizer (CBO), the ORC file format, and vectorization.
  - Sub-second: aiming for responses under one second on short queries. Hive 2.0 adds LLAP.
  - Already available on HDP!
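
As a minimal sketch of how the optimizations listed for Hive up to 1.2.1 are switched on, the snippet below assembles a HiveQL session script. The three `SET` properties are real Hive configuration keys; the table name and columns are hypothetical, and whether each setting is already the default depends on the Hive and HDP version in use.

```python
# Hive properties for Tez, the cost-based optimizer, and vectorization.
HIVE_SETTINGS = {
    "hive.execution.engine": "tez",
    "hive.cbo.enable": "true",
    "hive.vectorized.execution.enabled": "true",
}


def session_script(settings, table, columns):
    """Return HiveQL that applies the settings and creates an ORC table."""
    lines = [f"SET {key}={value};" for key, value in settings.items()]
    column_list = ", ".join(f"{name} {hive_type}" for name, hive_type in columns)
    lines.append(f"CREATE TABLE {table} ({column_list}) STORED AS ORC;")
    return "\n".join(lines)


# Hypothetical table for an access report.
script = session_script(
    HIVE_SETTINGS, "access_report", [("path", "STRING"), ("hits", "BIGINT")]
)
print(script)
```

The generated text could be pasted into a Hive session or saved as a script file; LLAP settings are left out because they depend on how the LLAP daemons are deployed.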

- 28. Today the Hadoop ecosystem is used in many places.
  - Adtech data flow: web servers and ad servers send logs to Hadoop (HDFS), which feeds the report UI and the ad delivery DB. Uses include reporting, generating models for bidding and optimization, real-time tracking, long-term storage of all logs, real-time log collection, and ETL and other batch processing.
  - Operations (deploy and effectively manage the platform): Ambari and Zookeeper to provision, manage, and monitor; Oozie for scheduling.
  - Governance: load data and manage it according to policy.
  - Security: a layered approach through authentication, authorization, accounting, and data protection.
  - Batch, interactive, and real-time data access: Pig (script), Hive (SQL), Cascading (Java, Scala), Storm (stream), Solr (search), HBase and Accumulo (NoSQL), Spark (in-memory), and other ISV engines, with Tez and Slider underneath.
  - YARN: the data operating system (cluster resource management), running over HDFS (Hadoop Distributed File System) on nodes 1 to N.

- 29. Key highlights in recent Hadoop evolution

- 30. Recent Hadoop evolution.
  - LLAP
  - HCatalog Stream Mutation API
  - Cloudbreak

- 31. Recent Hadoop evolution.
  - Hive: LLAP; ACID and the HCatalog Stream Mutation API
  - Cloudbreak

- 33. SQL evolution on Hadoop. [Chart with Speed and Feature axes, placing SQL engines against capabilities: Batch SQL, Interactive SQL, Sub-Second SQL, OLAP / Cube, ACID / MERGE. Engines shown: Hive 0.x (MapReduce), Hive 1.2- (Tez, Vectorize, ORC, CBO), Hive 2.0 (LLAP), Presto, Impala, Drill, Spark SQL, HAWQ, Kylin, Druid, Kyvos Insights, AtScale. Legend labels: MPP, Commercial, Source.]

- 35. Hive 2 with LLAP: Architecture Overview.
  - Query path: SQL queries arrive over ODBC / JDBC at HiveServer2 (the query endpoint), are handed to query coordinators, and are executed by query executors inside LLAP daemons running in the YARN cluster.
  - The LLAP daemons hold an in-memory cache shared across all users.
  - Deep storage: HDFS, S3, and other HDFS-compatible filesystems.
  - The design stays close to an MPP architecture while picking the best of several worlds: it has a cache layer, it uses YARN's scaling capabilities, and queries that do not need low latency can still be processed in ordinary Tez containers.

- 36. Hive 2 with LLAP: 25+x Performance Boost. Hive 2 with LLAP averages 26x faster than Hive 1. [Chart: query time in seconds (lower is better) for Hive 1 / Tez and Hive 2 / LLAP, with the speedup (x factor) per query.]
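
For readers who want to reproduce this kind of comparison on their own queries, the speedup factor is the old query time divided by the new one. The timings below are made-up placeholders, not the measurements behind the chart, and the slide does not say which kind of average it reports.

```python
from statistics import mean


def speedups(baseline_seconds, llap_seconds):
    """Per-query speedup factor: baseline time divided by LLAP time."""
    return [old / new for old, new in zip(baseline_seconds, llap_seconds)]


# Hypothetical timings in seconds for three queries (illustration only).
hive1_tez = [120.0, 45.0, 10.0]
hive2_llap = [6.0, 3.0, 2.0]

factors = speedups(hive1_tez, hive2_llap)
average_factor = mean(factors)                    # mean of per-query ratios
total_ratio = sum(hive1_tez) / sum(hive2_llap)    # ratio of total run times

print(factors, round(average_factor, 2), round(total_ratio, 2))
```

The two summary numbers differ: the mean of per-query ratios weights every query equally, while the ratio of totals is dominated by the longest-running queries, so it is worth stating which one a headline figure uses.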

- 38. Hive ACID Production-Ready with HDP 2.5. Notable improvements:
  - Tested at multi-TB scale using the TPC-H benchmark: reliably ingests 400GB+ per day within a partition; 10TB+ of raw data in a single partition; simultaneous ingest, delete, and query.
  - 70+ stabilization improvements.
  - Supported: SQL INSERT, UPDATE, DELETE; the Streaming API.
  - Future: SQL MERGE is under development (HIVE-10924).
  - [Chart: Query Time versus Data Size, showing runtime for all queries (s) and total compressed data, 16/05/24 to 16/06/01.]
  - [Chart: Times for Inserts and Deletes (s), with series time_insert_lineitem, time_insert_orders, time_delete_lineitem, time_delete_orders, 16/05/23 to 16/06/01.]
  - A pain point of analytics and aggregation databases has been that data had to be loaded in batches. Being able to do streaming inserts is a big advantage.
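
The supported statements on this slide can be sketched as a short HiveQL script, assembled here in Python. It assumes the usual requirements for Hive ACID tables in this generation of Hive (a bucketed ORC table with `'transactional'='true'`, plus the transaction manager settings); the table, columns, and values are hypothetical, and server-side settings such as compaction are omitted.

```python
def acid_table_ddl(table, columns, bucket_column, buckets):
    """Return DDL for a bucketed, transactional ORC table."""
    column_list = ", ".join(f"{name} {hive_type}" for name, hive_type in columns)
    return (
        f"CREATE TABLE {table} ({column_list}) "
        f"CLUSTERED BY ({bucket_column}) INTO {buckets} BUCKETS "
        "STORED AS ORC TBLPROPERTIES ('transactional'='true');"
    )


statements = [
    # Client-side settings that enable Hive transactions.
    "SET hive.support.concurrency=true;",
    "SET hive.txn.manager=org.apache.hadoop.hive.ql.lockmgr.DbTxnManager;",
    # Hypothetical table of ad events, bucketed on its id column.
    acid_table_ddl(
        "ad_events", [("event_id", "BIGINT"), ("event_type", "STRING")], "event_id", 4
    ),
    "INSERT INTO ad_events VALUES (1, 'impression');",
    "UPDATE ad_events SET event_type = 'click' WHERE event_id = 1;",
    "DELETE FROM ad_events WHERE event_id = 1;",
]

print("\n".join(statements))
```

The UPDATE changes only `event_type` because the bucketing column itself cannot be updated.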

- 39. HCatalog Stream Mutation API. [Diagram: a table stored on HDFS is divided into buckets, and each bucket holds multiple ORC files.]
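
The table and bucket layout on this slide can be pictured with a simplified routing sketch: each record key is hashed and taken modulo the bucket count to pick a bucket. This only illustrates the layout and is not the Mutation API itself; it assumes integer keys whose hash is the value itself, and the keys and bucket count are invented.

```python
def bucket_for(key, num_buckets):
    """Pick a bucket for an integer key: non-negative hash modulo bucket count."""
    return (key & 0x7FFFFFFF) % num_buckets


def route(keys, num_buckets):
    """Group keys by the bucket they would be written to."""
    buckets = {bucket: [] for bucket in range(num_buckets)}
    for key in keys:
        buckets[bucket_for(key, num_buckets)].append(key)
    return buckets


# Hypothetical record ids spread over three buckets.
layout = route([1, 2, 3, 4, 5, 6, 7], 3)
print(layout)
```

Because a given key always lands in the same bucket, later updates and deletes for that key can be written next to the original rows in that bucket's ORC files.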

- 41. Cloudbreak: makes deploying HDP to the cloud easy.
  - Steps: 1. Pick a Blueprint. 2. Choose a Cloud. 3. Launch HDP!
  - Example Ambari Blueprints: IoT Apps (Storm, HBase, Hive), BI / Analytics (Hive), Data Science (Spark), Dev / Test (all HDP services).
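
To give a feel for what "pick a Blueprint" involves, here is a heavily trimmed sketch of an Ambari Blueprint document built as a Python dictionary. The top-level keys (`Blueprints`, `host_groups`, `components`, `cardinality`) follow the Ambari Blueprint JSON layout; the blueprint name, host group names, and component selection are assumptions for illustration, and the result is too incomplete to deploy as-is.

```python
import json


def host_group(name, cardinality, components):
    """Build one host group entry listing the components it runs."""
    return {
        "name": name,
        "cardinality": str(cardinality),
        "components": [{"name": component} for component in components],
    }


# Hypothetical, incomplete blueprint for a small BI / analytics cluster.
blueprint = {
    "Blueprints": {
        "blueprint_name": "bi-analytics-sketch",
        "stack_name": "HDP",
        "stack_version": "2.5",
    },
    "host_groups": [
        host_group("master", 1, ["NAMENODE", "RESOURCEMANAGER", "HIVE_SERVER", "ZOOKEEPER_SERVER"]),
        host_group("worker", 3, ["DATANODE", "NODEMANAGER"]),
    ],
}

blueprint_json = json.dumps(blueprint, indent=2)
print(blueprint_json)
```

A document of this shape is what gets selected in step 1; choosing the cloud and launching the cluster are then handled by Cloudbreak.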

- 42. Recent Hadoop evolution: in summary.
  - Hive: LLAP; ACID and the HCatalog Stream Mutation API
  - Cloudbreak